Software performance and scalability are frequent topics when we talk about application development. A big reason is that an application's performance and scalability directly affect its success in the market. An application, no matter how good its user interface, won't claim market share if its response time is sluggish.

This is why we spend so much time improving an application's performance and scalability as its user base grows.

Where usual testing practices fail

Fortunately, we have many tools for testing software behavior under high-stress conditions. Some help identify the causes of performance and scalability issues, and benchmarking tools can stress-test a system to give a relative measure of its stability under high load. However, performance and scale engineering runs into problems when we try to use these tools to understand enterprise products. Generally, these products are not single applications; instead, they consist of several applications interacting with each other to provide a consistent and unified user experience.

We may not get any meaningful data about a product's performance and scalability issues if we test only its individual components. The real numbers can be gathered only when we test the application in real-life scenarios, that is, by subjecting the entire enterprise application to a real-life workload. The question becomes: How can we achieve this real-life workload in a test scenario?

Containers to the rescue

The answer is containers. To explain how containers can help us understand a product's performance and scalability, let's look at Puppet, a software configuration management tool, as an example.

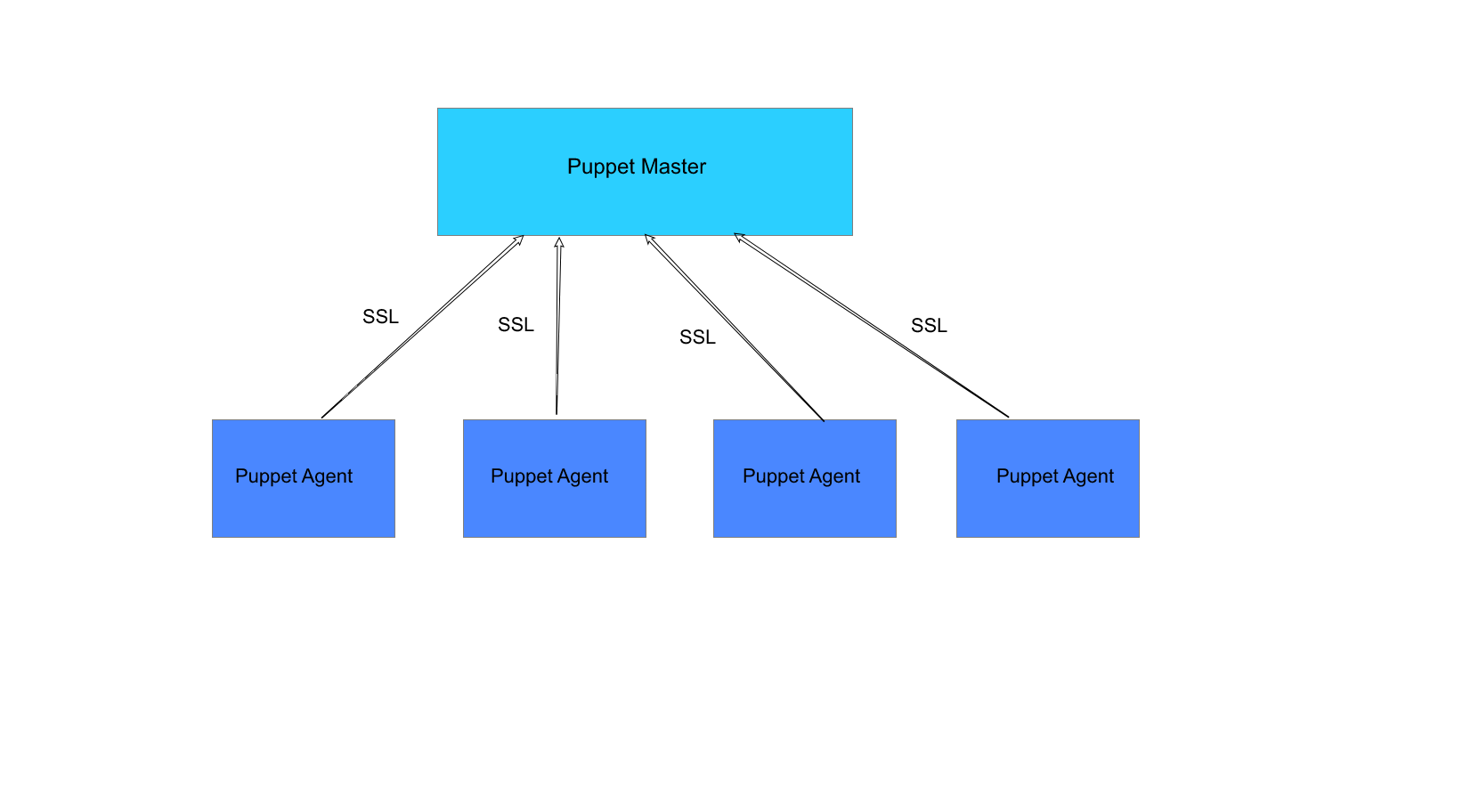

Puppet uses a client-server architecture, where there are one or more Puppet masters (servers), and the systems that are to be configured using Puppet run Puppet agents (clients).

To understand Puppet's performance and scalability, we need to stress the Puppet masters with high load from the agents running on various systems.

To do this, we can install puppet-master on one system, then run multiple containers, each running our operating system with puppet-agent installed and running on top of it.

Next, we need to configure the Puppet agents to interact with the Puppet master to manage the system configuration. This stresses the server when it handles the agents' requests and stresses each client when it updates its software configuration.
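As a rough illustration of the setup described above, here is a minimal Python sketch that builds one `docker run` command per agent container. It is only a sketch under stated assumptions: the image name `example/puppet-agent:latest`, the hostname `puppet.example.test`, and the address `192.0.2.10` are hypothetical placeholders, and the image is assumed to already have puppet-agent installed. By default the script only prints the commands, so you can review them before running anything.

```python
import shlex
import subprocess

# Hypothetical values: replace them with ones that match your environment.
AGENT_IMAGE = "example/puppet-agent:latest"  # assumed image with puppet-agent installed
MASTER_HOST = "puppet.example.test"          # assumed hostname of the Puppet master
MASTER_IP = "192.0.2.10"                     # assumed address (documentation range)


def build_agent_command(index):
    """Return the `docker run` argument list for one agent container."""
    name = f"puppet-agent-{index:04d}"
    return [
        "docker", "run", "--detach",
        "--name", name,
        "--hostname", name,
        # Let the container resolve the master's hostname.
        "--add-host", f"{MASTER_HOST}:{MASTER_IP}",
        AGENT_IMAGE,
        # Run the agent in the foreground so the container stays alive.
        "puppet", "agent", "--server", MASTER_HOST, "--no-daemonize", "--verbose",
    ]


def launch_agents(count, dry_run=True):
    """Build one command per agent; print them, or execute them if dry_run is False."""
    commands = [build_agent_command(i) for i in range(count)]
    for command in commands:
        if dry_run:
            print(shlex.join(command))
        else:
            subprocess.run(command, check=True)
    return commands


if __name__ == "__main__":
    launch_agents(3)
```

Calling `launch_agents(1000, dry_run=False)` would attempt to start 1,000 agents against the same master. Whether a single host can sustain that many depends on the image and the workload.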

So, how did the containers help here? Couldn't we have just simulated the load on the Puppet master through a script?

The answer is no. It might have simulated the load, but we would have gotten a highly unrealistic view of its performance.

The reason for this is quite simple. In real life, a user system runs a number of other processes besides puppet-agent or puppet-master. Each of those processes consumes a certain amount of system resources and therefore directly affects Puppet's performance by limiting the resources Puppet can use.
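One way to approximate those competing processes inside a test container is to run a small background load next to the agent. The following is a hypothetical sketch, not part of Puppet or any container tooling: the function name `background_load` and its parameters are made up for illustration. It keeps one CPU core busy for a chosen fraction of each period and sleeps for the rest.

```python
import time


def background_load(duration_s, duty_cycle, period_s=0.1):
    """Keep one core busy for `duty_cycle` of every period; return periods completed."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0.0 and 1.0")
    deadline = time.monotonic() + duration_s
    periods = 0
    while time.monotonic() < deadline:
        busy_until = time.monotonic() + period_s * duty_cycle
        while time.monotonic() < busy_until:
            pass  # busy-wait to consume CPU time
        time.sleep(period_s * (1.0 - duty_cycle))  # idle for the rest of the period
        periods += 1
    return periods


if __name__ == "__main__":
    # Roughly 30% load on one core for two seconds.
    print(background_load(duration_s=2.0, duty_cycle=0.3))
```

A real system's background activity is far more varied (memory, disk, and network use as well), so treat this as a stand-in for CPU contention only.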

This was a simple example, but the performance and scale engineering of enterprise applications can get really challenging when dealing with products that combine more than a handful of components. This is where containers shine.

Why containers and not something else?

A genuine question is: Why use containers and not virtual machines (VMs) or just bare-metal machines?

The logic behind running containers comes down to how many instances of a system we can launch, as well as their cost versus the alternatives.

Although VMs provide a powerful mechanism, they also incur a lot of overhead on system resources, thereby limiting the number of systems that can be replicated on a single bare-metal server. By contrast, it is fairly easy to launch even 1,000 containers on the same system, depending on what kind of simulation you are trying to achieve, while keeping the resource overhead low.
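The overhead argument above can be made concrete with some back-of-the-envelope arithmetic. The sketch below estimates how many simulated systems fit in a host's memory given a per-instance overhead. All of the numbers are illustrative placeholders chosen for this example, not measurements: real overheads depend heavily on the hypervisor, the container runtime, and the guest image.

```python
def max_instances(host_memory_mib, reserved_mib, workload_mib, overhead_mib):
    """Estimate how many instances fit in memory, ignoring CPU, disk, and network."""
    usable = host_memory_mib - reserved_mib
    if usable <= 0:
        return 0
    return usable // (workload_mib + overhead_mib)


if __name__ == "__main__":
    # Placeholder figures (assumptions, not measurements).
    host = 128 * 1024       # 128 GiB host
    reserved = 4 * 1024     # memory kept back for the host itself
    agent = 100             # assumed footprint of the simulated workload
    container_extra = 10    # assumed per-container overhead
    vm_extra = 512          # assumed per-VM overhead (guest kernel and OS services)

    print("containers:", max_instances(host, reserved, agent, container_extra))
    print("VMs:", max_instances(host, reserved, agent, vm_extra))
```

With these placeholder figures the container count comes out several times higher than the VM count. The exact ratio is only as good as the assumed overheads, so measure your own before relying on it.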

With bare-metal servers, the performance and scale can be as realistic as needed, but a major problem is cost overhead. Will you buy 1,000 servers for performance and scale experiments?

That's why containers overall provide an economical and scalable way of testing products' performance against a real-life scenario while keeping resources, overhead, and costs in check.

Learn more in Saurabh Badhwar's talk Testing Software Performance and Scalability Using Containers at Open Source Summit in Los Angeles.
