We are your typical geeks… always looking for a new hobby project to keep our skills sharp, pass time, and hopefully one day create a project that will make us rich. OK, the last part hasn't happened yet, but someday… The rest of it has been just having fun working on some interesting projects together. Usually at night after our kids are in bed, we meet in one of our garages and just code.

A while ago, we heard about Donkey Car, a self-driving robotic car, and thought, "Wow. That's cool. Wonder if we can do that?" We were even more fired up when we discovered that the Donkey Car project is Python-based, and we are over on the Java side. So, enter GalecinoCar, our Java and Groovy port of the Python-based Donkey Car project. (Mad props to the Donkey Car community, BTW!)

Setting up the hardware

The project is built on the Exceed Magnet truck chassis. A Raspberry Pi provides the computing power, and a connected Raspberry Pi camera provides the video input. A PCA9685 board sits between the chassis and the Pi and generates the pulse-width modulation signals for steering and throttle. The car's only other major component is a 3D-printed roll cage that attaches to the car and holds the Pi and the camera. We must admit we were too lazy to design our own mounting for the chassis, so we just used Donkey Car's roll-cage designs.
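For readers new to the PCA9685: the chip splits each PWM period into 4,096 steps (12-bit resolution), and its output frequency is set by a prescale value derived from its nominal 25 MHz internal oscillator. The sketch below is not GalecinoCar code; it just shows that arithmetic. The 60 Hz update rate and the 1,500-microsecond pulse in `main` are illustrative assumptions, not values from our build.

```java
/** Hypothetical helper showing the PCA9685 timing arithmetic (not from GalecinoCar). */
final class Pca9685Math {
    /** Nominal frequency of the PCA9685 internal oscillator, in hertz. */
    static final double OSCILLATOR_HZ = 25_000_000.0;
    /** Each PWM period is divided into 4096 steps (12-bit counter). */
    static final int STEPS_PER_PERIOD = 4096;

    private Pca9685Math() {}

    /** PRE_SCALE register value for a desired PWM frequency: round(osc / (4096 * f)) - 1. */
    static int prescale(double frequencyHz) {
        return (int) Math.round(OSCILLATOR_HZ / (STEPS_PER_PERIOD * frequencyHz)) - 1;
    }

    /** Number of 12-bit steps the output stays high for a pulse of the given width. */
    static int pulseToTicks(double pulseMicros, double frequencyHz) {
        double periodMicros = 1_000_000.0 / frequencyHz;
        return (int) Math.round(pulseMicros / periodMicros * STEPS_PER_PERIOD);
    }

    public static void main(String[] args) {
        // Assumed 60 Hz update rate and a 1500-microsecond "neutral" pulse.
        System.out.println("prescale = " + prescale(60));           // prints "prescale = 101"
        System.out.println("ticks    = " + pulseToTicks(1500, 60)); // prints "ticks    = 369"
    }
}
```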

The hardware was easy enough to wrangle together, with one exception: the chassis was hard to find. The Exceed Magnet truck is several years old, and Donkey Car's popularity has made it scarce. It took us about two months to track down two of them.

Training the autopilot

Once the car was built and the software was in place, training the autopilot was a total adventure. It took several tries to create the training data and then train the car's autopilot. At times, it felt like trying to teach a teenager to drive for the first time.

"No, don't go that way!"

"Step on the gas!"

"Don't hit that cat!"

The first time we got the car to self-drive was amazing. We were in another room watching the feed from the car's camera on a wall projector. Nervously, we counted down until the car was set loose. What followed was 30 seconds of intense excitement, fear, and chaos. When the autopilot started, the car took off at full speed and crashed into three walls before it flipped over and came to rest. When we got to the car, we found it had broken its drivetrain and left little marks in the drywall where it crashed. Watching the entire thing unfold through the onboard camera was wild!

We found the best way to train the car is with an old PS3 controller, which easily pairs to the car via Bluetooth.

The software on the Pi offers several other ways to control the car; however, we found a long lag between a user entering a command (e.g., turn right) and the car responding, and that lag produced some pretty crappy autopilot models. The PS3 controller had low latency and gave us better control over the car, which, in turn, gave us a better autopilot model.
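One detail that matters when recording training data from a gamepad is how raw stick positions turn into steering and throttle values. Here's a hypothetical sketch, not GalecinoCar's code: it assumes the stick reports values in [-1, 1], treats readings inside an assumed dead zone as exactly zero so a resting stick doesn't record small drift, and rescales the remaining travel so full deflection still maps to ±1.

```java
/** Hypothetical mapping from a gamepad axis reading to a normalized control value. */
final class StickMapper {
    private final double deadZone;

    StickMapper(double deadZone) {
        if (deadZone < 0 || deadZone >= 1) {
            throw new IllegalArgumentException("dead zone must be in [0, 1)");
        }
        this.deadZone = deadZone;
    }

    /**
     * Maps a raw axis value (assumed to be in [-1, 1]) to [-1, 1]:
     * readings inside the dead zone become 0, and the rest of the travel
     * is rescaled so that full deflection still yields +/-1.
     */
    double map(double raw) {
        double clamped = Math.max(-1.0, Math.min(1.0, raw));
        double magnitude = Math.abs(clamped);
        if (magnitude <= deadZone) {
            return 0.0;
        }
        double scaled = (magnitude - deadZone) / (1.0 - deadZone);
        return Math.copySign(scaled, clamped);
    }
}
```

For example, with `new StickMapper(0.05)` a reading of 0.03 records as 0.0, while 0.525 records as 0.5.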

Coding the software

We use Micronaut to supply REST endpoints for the remote-control user interface (UI) and to control the car's throttle and steering. The user can choose between manual control and an autopilot that uses Donkey Car's TensorFlow/Keras models.
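Switching between manual control and autopilot boils down to choosing which source of commands reaches the motors. The plain-Java sketch below illustrates only that idea; `DriveMode`, `DriveCommand`, and `ModeSelector` are hypothetical names, not classes from the GalecinoCar repository.

```java
import java.util.Objects;

/** Hypothetical driving modes the UI could offer. */
enum DriveMode { MANUAL, AUTOPILOT }

/** Hypothetical normalized command: both values assumed to be in [-1, 1]. */
record DriveCommand(double steering, double throttle) {}

/** Picks the command to send to the car based on the currently selected mode. */
final class ModeSelector {
    // volatile so a mode change made on a request thread is seen by the drive loop
    private volatile DriveMode mode = DriveMode.MANUAL;

    void setMode(DriveMode newMode) {
        mode = Objects.requireNonNull(newMode);
    }

    DriveMode mode() {
        return mode;
    }

    /** Returns the user's command in MANUAL mode and the model's prediction in AUTOPILOT mode. */
    DriveCommand select(DriveCommand fromUser, DriveCommand fromPilot) {
        return switch (mode) {
            case MANUAL -> fromUser;
            case AUTOPILOT -> fromPilot;
        };
    }
}
```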

Micronaut is a modern, Java virtual machine (JVM)-based, full-stack framework for building modular, easily testable microservice applications. It features built-in dependency injection, auto-configuration, configuration sharing, HTTP routing, fast configuration, and load balancing. It has a fast startup time (around one second on a well-equipped development machine) and supports Java, Groovy, and Kotlin. It supports distributed tracing with third-party tools like Zipkin and includes a nice command-line interface (CLI) for generating code, with profile support for creating various types of projects.

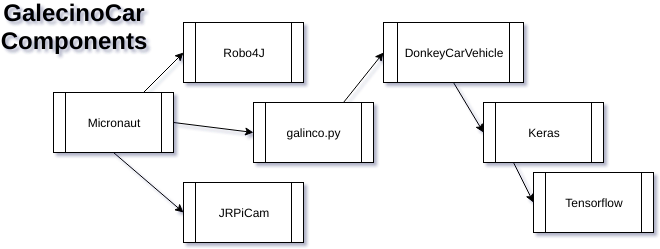

Inside the Micronaut application, the Robo4J library controls the throttle and steering under manual control, and the jrpicam library provides the interface to the camera. See the diagram below for how all of the pieces fit together:

Here's how some of the code works to tie everything together:

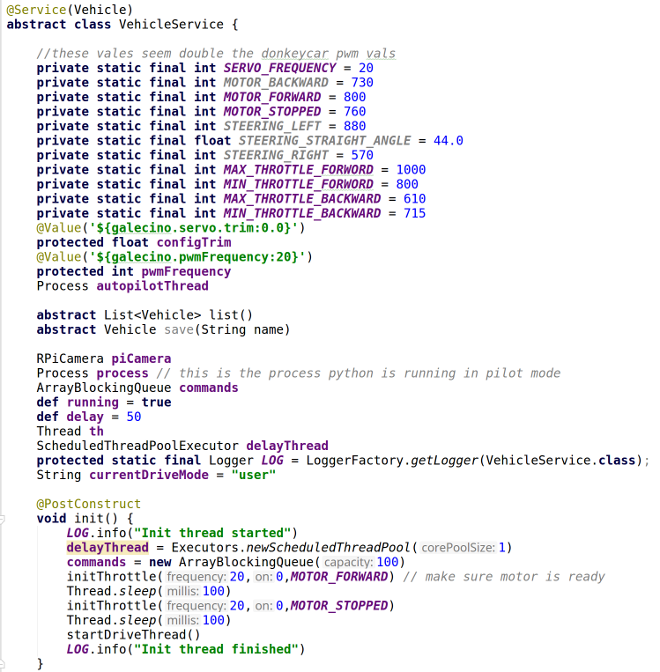

In the code above, Micronaut creates a service, in this case a GORM Data Service; if we didn't need database support, we could have used a @Singleton for a simpler service. Several things are going on here, starting with an initialization routine. (You can annotate any method with @PostConstruct to have it run after the class is created. If you annotate multiple methods, they will run in the order declared.) Because the server is bombarded with requests, we use a queuing mechanism that discards all but the latest command sent to the car.
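The "keep only the latest command" idea can be expressed in plain Java with a single-slot mailbox. This is a minimal sketch assuming many request threads writing commands and one drive loop reading them; `LatestCommandSlot` is a hypothetical name, and we're not claiming this is the exact mechanism inside the GalecinoCar service.

```java
import java.util.Objects;
import java.util.Optional;
import java.util.concurrent.atomic.AtomicReference;

/**
 * Hypothetical single-slot "mailbox": each new command overwrites the previous
 * one, so a slow consumer only ever sees the most recent command.
 */
final class LatestCommandSlot<T> {
    private final AtomicReference<T> latest = new AtomicReference<>();

    /** Called by request handlers; replaces any command that has not been consumed yet. */
    void offer(T command) {
        latest.set(Objects.requireNonNull(command));
    }

    /** Called by the drive loop; atomically takes the newest command, if there is one. */
    Optional<T> poll() {
        return Optional.ofNullable(latest.getAndSet(null));
    }
}
```

Compared with an unbounded queue, this design never lets a backlog of stale steering commands build up when the endpoints are flooded with requests.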

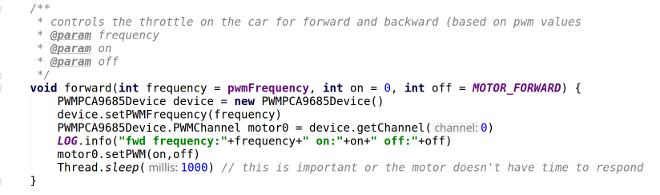

This code shows how we move the car forward or backward based on pulse-width modulation (PWM) values sent to the motor.
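As a rough illustration of the idea, the sketch below maps a normalized throttle value in [-1, 1] to a PCA9685 pulse value, with separate ranges for forward and reverse so each direction can be calibrated independently. `ThrottleMapper` and the calibration numbers used with it (220, 370, and 500 ticks) are hypothetical placeholders; real values have to come from calibrating your own speed controller.

```java
/** Hypothetical throttle mapper: normalized throttle in [-1, 1] -> PCA9685 pulse ticks. */
final class ThrottleMapper {
    private final int reverseTicks; // pulse for full reverse
    private final int stoppedTicks; // pulse for neutral (motor stopped)
    private final int forwardTicks; // pulse for full forward

    ThrottleMapper(int reverseTicks, int stoppedTicks, int forwardTicks) {
        this.reverseTicks = reverseTicks;
        this.stoppedTicks = stoppedTicks;
        this.forwardTicks = forwardTicks;
    }

    /** Clamps the input, then interpolates linearly within the forward or reverse range. */
    int toTicks(double throttle) {
        double t = Math.max(-1.0, Math.min(1.0, throttle));
        double span = t >= 0 ? forwardTicks - stoppedTicks : stoppedTicks - reverseTicks;
        return (int) Math.round(stoppedTicks + t * span);
    }
}
```

With `new ThrottleMapper(220, 370, 500)`, a throttle of 0.5 maps to 435 ticks and -0.5 maps to 295.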

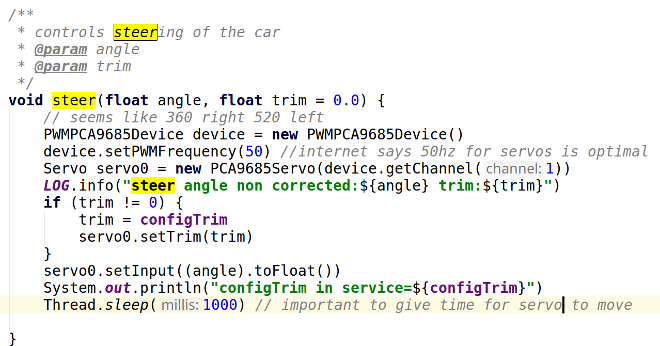

This code shows how the Robo4J library controls steering. Robo4J, written by Marcus Hirt and Miro Wengner, is a Java library for controlling robots; its Java Native Interface (JNI) support helps Java developers control robotics hardware on the Raspberry Pi.
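Steering works on a similar principle: a hobby servo's position is set by the width of its control pulse, conventionally somewhere around 1,000 to 2,000 microseconds with roughly 1,500 at center. The sketch below maps a normalized steering value to a pulse width, with an optional trim offset. It is a hypothetical illustration with assumed endpoint values; it does not use or reproduce the Robo4J API.

```java
/** Hypothetical steering mapper: normalized steering in [-1, 1] -> servo pulse width in microseconds. */
final class SteeringMapper {
    private final double leftMicros;  // pulse width at full left (assumed calibration value)
    private final double rightMicros; // pulse width at full right (assumed calibration value)
    private final double trimMicros;  // small offset to correct a car that drifts when "straight"

    SteeringMapper(double leftMicros, double rightMicros, double trimMicros) {
        this.leftMicros = leftMicros;
        this.rightMicros = rightMicros;
        this.trimMicros = trimMicros;
    }

    /** -1 = full left, 0 = center, +1 = full right; out-of-range input is clamped. */
    double toMicros(double steering) {
        double s = Math.max(-1.0, Math.min(1.0, steering));
        double center = (leftMicros + rightMicros) / 2.0;
        double halfSpan = (rightMicros - leftMicros) / 2.0;
        return center + s * halfSpan + trimMicros;
    }
}
```

Because the center and span are derived from the two endpoints, swapping them (for a servo mounted the other way around) reverses the steering direction without any other change.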

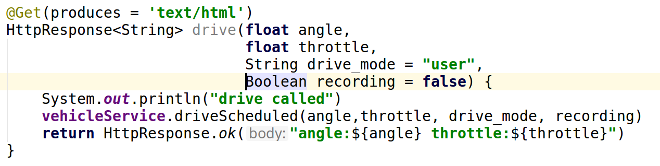

In this code, the Micronaut controller provides the endpoint the UI uses to control the car. The @Get annotation controls the HTTP method and content type, and the method's parameter list defines which GET parameters the endpoint accepts. If you want JSON returned instead, just change the produces parameter to application/json.
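Micronaut binds those GET parameters to the method arguments for you. To show roughly what that binding amounts to, here's a framework-free sketch that parses a query string such as `angle=0.25&throttle=0.5` and clamps both values to [-1, 1]. The parameter names `angle` and `throttle` and the `ControlRequest` record are assumptions for illustration, not GalecinoCar's actual endpoint.

```java
import java.net.URLDecoder;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical control request decoded from a query string. */
record ControlRequest(double angle, double throttle) {

    /** Parses "angle=...&throttle=..." (missing values default to 0) and clamps each to [-1, 1]. */
    static ControlRequest fromQuery(String query) {
        Map<String, String> params = new HashMap<>();
        for (String pair : query.split("&")) {
            if (pair.isEmpty()) {
                continue; // tolerate "" and stray '&' characters
            }
            int eq = pair.indexOf('=');
            String key = eq < 0 ? pair : pair.substring(0, eq);
            String value = eq < 0 ? "" : pair.substring(eq + 1);
            params.put(URLDecoder.decode(key, StandardCharsets.UTF_8),
                       URLDecoder.decode(value, StandardCharsets.UTF_8));
        }
        return new ControlRequest(clamp(parse(params.get("angle"))),
                                  clamp(parse(params.get("throttle"))));
    }

    /** Missing or empty values count as 0; malformed numbers throw NumberFormatException. */
    private static double parse(String value) {
        return (value == null || value.isEmpty()) ? 0.0 : Double.parseDouble(value);
    }

    private static double clamp(double v) {
        return Math.max(-1.0, Math.min(1.0, v));
    }
}
```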

You can see the code for the GalecinoCar project in our GitHub repository.

Watching GalecinoCar in action

GalecinoCar's public debut was at GR8Conf EU 2018, where we gave a presentation about the project and demonstrated its ability to drive.

Image from GalecinoCar looking at the track at GR8Conf EU 2018.

Here is a clip from GR8Conf EU 2018 of the car doing its thing:

Looking into the future

We've got a lot of great ideas for this project. The most important thing is to get the AI portion working better and have the AI start to think more like a person behind the wheel of a car. First, we want the car to be able to make better decisions when driving, particularly in the area of object avoidance, so we plan to use machine learning, image recognition, and a LIDAR system to add features for identifying objects.

We want the autopilot to not only identify how far away an object is but also determine what the object is and whether there are special ways to respond to it. For instance, the car's steering-avoidance response should be vastly different depending on whether the thing it is trying to avoid is a person or a fallen tree limb. Unfortunately, we're limited by the computing power of a Pi, but maybe someday we'll get a CUDA core rig on there.

Car-to-car communication is another fun problem we plan on tackling. If we put two cars on a track at the same time, they should be able to negotiate with each other for passing. Perhaps we will even have to build in learning on traffic protocols and laws.

We have no intention of scaling the car up to full size, which rules out several technologies we could otherwise use. For instance, a 1/16th-scale car wouldn't have much use for GPS data.

GalecinoCar has been an incredibly fun project to work on, and it's definitely one of our more challenging hobby projects. However, having laid a solid foundation, we expect that the project will start to grow at an accelerated rate. There is so much potential for what we can do with this vehicle, and so much we can train an AI to do with self-guiding a car, so this project will be going on for a while.

Ryan Vanderwerf and Lee Fox will present GalecinoCar: A self-driving car using machine learning, microservices, Java, and Groovy at the 20th annual OSCON event, July 16-19 in Portland, Ore.
