When people talk about diversity and inclusion in open source, the discussion usually centers on how to make a project's culture more inclusive. But can the software itself be gender biased? Our research says it can. So, how do you know if your software is biased? And, if it is, how can you make it more inclusive?

The GenderMag method is a way to identify gender-inclusiveness problems in your software. It is packaged as a "kit" that is freely available for download at GenderMag.org.

The method was developed by Oregon State University distinguished professor Margaret Burnett, whose internationally recognized work with students and collaborators has shown gender differences in how people problem-solve with software—from people who are working with Excel formulas to professional programmers.

She was inspired to design GenderMag by a software product manager who asked her for help with his company's application, which medical practitioners use to program medical devices to suit their patients' needs. His customer base was mostly women, and unfortunately, many of them did not like the software. With an all-male development team, the manager was at a loss for what to do.

In this article, I will explain how gender biases get into software and then describe what these biases mean for open source tools.

How does gender bias sneak into software?

Individual differences in how people problem-solve and use software features often cluster by gender; that is, certain problem-solving styles are favored more by men than by women (and vice versa). Software tools tend to support the problem-solving styles their developers prefer. When those tools are built by male-dominated teams, they can inadvertently embed gender bias.

Research over the past 10 years across numerous populations has identified the following five problem-solving facets that impact how individuals use software:

- Motivations for using the software

- Style of processing information

- Computer self-efficacy

- Attitudes toward technological risks

- Preferred styles for learning technology

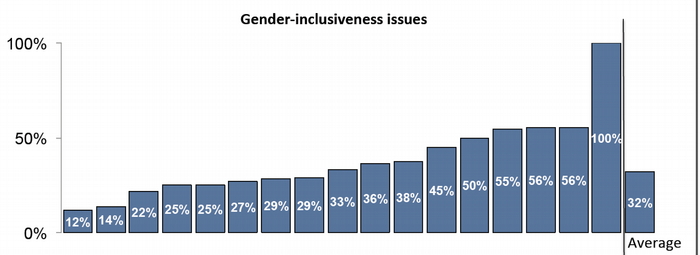

The GenderMag method has identified inclusivity issues in real-world software teams. As the following chart shows, 17 software teams across a range of domains have found gender biases in their own software when using GenderMag.

Percentage of software features with embedded gender biases.

How does GenderMag work?

The GenderMag method consists of a gender-specialized cognitive walkthrough with a set of personas. Each persona represents a subset of a system's target users, characterized by the five problem-solving facets listed above. Tool designers perform the GenderMag walkthrough to identify potential usability issues for newcomers to a program or feature.

In a GenderMag walkthrough, tool designers answer three questions through the lens of a specific persona's problem-solving facets—one question about each subgoal in a detailed use case and two questions about each interface action.

To explain, let's look at a walkthrough using the Abby Jones persona:

Subgoal Q: Will Abby Jones have formed this subgoal as a step to her overall goal? (Yes/no/maybe, why, what facets did you use)

Action Q1: Will Abby Jones know what to do in the user interface at this step? (Yes/no/maybe, why, what facets did you use)

Action Q2: If Abby Jones does the right thing, will she know she did the right thing and that she is making progress toward her goal? (Yes/no/maybe, why, what facets did you use)

If you answer no or maybe to any of these questions, and your reason is tied to one of the five facets, you might have found an inclusivity bug.
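To make that decision rule concrete, here is a minimal Python sketch of how a team might record walkthrough answers and flag candidate inclusivity bugs. The class names, the short facet labels, and the `possible_inclusivity_bugs` helper are hypothetical and are not part of the GenderMag kit or the GenderMag Recorder's Assistant; the sketch only encodes the rule that a no or maybe answer tied to at least one facet is a possible inclusivity bug.

```python
from dataclasses import dataclass, field
from enum import Enum


class Answer(Enum):
    YES = "yes"
    NO = "no"
    MAYBE = "maybe"


# Hypothetical short labels for the five problem-solving facets listed above.
FACETS = {
    "motivations",
    "information processing style",
    "computer self-efficacy",
    "risk attitude",
    "learning style",
}


@dataclass
class WalkthroughAnswer:
    step: str            # the subgoal or interface action being evaluated
    question: str        # "Subgoal Q", "Action Q1", or "Action Q2"
    answer: Answer
    why: str             # the team's reasoning for the answer
    facets: set[str] = field(default_factory=set)  # facets the team used


def possible_inclusivity_bugs(answers):
    """Return answers that are 'no' or 'maybe' AND cite at least one facet."""
    flagged = []
    for a in answers:
        unknown = a.facets - FACETS
        if unknown:
            raise ValueError(f"unknown facet(s): {sorted(unknown)}")
        if a.answer in (Answer.NO, Answer.MAYBE) and a.facets:
            flagged.append(a)
    return flagged


# Hypothetical walkthrough of one step with the Abby Jones persona.
answers = [
    WalkthroughAnswer("Find an issue to work on", "Subgoal Q", Answer.YES,
                      "Abby wants to contribute", {"motivations"}),
    WalkthroughAnswer("Click the Fork button", "Action Q1", Answer.MAYBE,
                      "Abby gathers information before acting; nothing explains forking",
                      {"information processing style", "risk attitude"}),
    WalkthroughAnswer("Click the Fork button", "Action Q2", Answer.NO,
                      "No feedback shows progress toward her goal", set()),
]

for a in possible_inclusivity_bugs(answers):
    print(f"{a.step} ({a.question})")  # prints: Click the Fork button (Action Q1)
```

Note that the Action Q2 answer is a "no" but cites no facet, so the helper treats it as an ordinary usability issue rather than a candidate inclusivity bug, mirroring the rule in the text.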

Where are the biases in open source?

Our research suggests that open source software would benefit from accounting for these individual differences in problem-solving styles during design, as they might be contributing to open source communities' low diversity rates. In a recent field study, five open source teams used the GenderMag method to analyze open source tools, including a code-hosting site, an issue tracker, and project documentation.

Using the GenderMag cognitive walkthrough, the open source teams identified gender bias in more than 70% of the tool issues they uncovered.

For example, one frequent problem showing gender bias is the fragmented way issues and their associated information are recorded in GitHub. The teams' analysis revealed that information fragmentation would disproportionately affect individuals with a comprehensive information processing style (i.e., getting a good understanding of the problem by gathering the pertinent information about it before proceeding with a solution). This fragmentation problem has gender bias because comprehensive information processing is statistically more prevalent among women than men. Even so, solving the fragmentation problem would help everyone who prefers comprehensive information processing, regardless of their gender.

Another problem the teams found was related to individual differences in learning style. When information was spread across the project site, with many actions for a newcomer to consider (e.g., clone, fork, different pull request options, finding issues), the teams showed that newcomers who like to learn by tinkering were likely to become disoriented. This problem also has a gender bias, but it disproportionately affects men, because learning by tinkering is statistically more prevalent among men than women. Here again, although this problem affects one gender more than the other, solving it would help everyone who prefers to learn by tinkering.

In these examples, the tools and technology were biased against people with problem-solving styles favored by women in one case, and against styles favored by men in the other case. In total, however, most of the technology-embedded problems the open source software teams in our study found were biased against problem-solving styles favored by women.

A subsequent study of newcomers' experiences with open source corroborated these teams' findings. The gender biases identified by the open source teams in our previous study matched the problems the newcomers in the second study reported in their diaries. Over the course of several months, these newcomers recorded the problems they faced with the tools and technology as they worked toward making their first contribution to an open source project. The newcomers' diaries showed statistically significant gender differences in how their problem-solving facets interacted with the barriers they encountered when they tried to participate.

What can you do?

You can help by using the freely available GenderMag method to find and then fix inclusivity bugs in the software you're building. You can also contribute to the GenderMag Recorder's Assistant, an emerging open source tool that aims to make the GenderMag process easier. If you're interested in partnering on other ways to help address gender biases in software, please contact us via the project website.

Anita Sarma will present "Is the Software Itself Gender-Biased? OSS Tools and Gender Inclusivity" at the Open Source Summit North America conference, July 21-31 in Vancouver, British Columbia.
