Faster startup and a smaller memory footprint always matter in Kubernetes: running thousands of application pods is expensive, and doing the same work with fewer worker nodes and other resources saves money. For containerized microservices on Kubernetes, memory matters more than throughput because:

- Memory is more expensive because it stays allocated for as long as the pod runs (unlike CPU cycles)

- Microservices multiply the overhead cost

- One monolith application becomes N microservices (e.g., 20 microservices ≈ 20GB)

This significantly impacts serverless function development and the Java deployment model. Many enterprise developers chose alternatives such as Go, Python, and Node.js to overcome the performance bottleneck. That was the case until now: Quarkus, a new Kubernetes-native Java stack, changes the picture. This article explains how to optimize Java performance to run serverless functions on Kubernetes using Quarkus.
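The memory multiplication in the list above can be made concrete with a little arithmetic. The following is a minimal sketch, not a sizing tool: the 1 GB per service is implied by the "20 microservices ≈ 20GB" example, while the 16 GB node capacity and the class and method names are assumptions chosen only for illustration.

```java
public class FootprintEstimate {

    // Total memory if every service instance needs the same amount.
    static double totalMemoryGb(int services, double perServiceGb) {
        return services * perServiceGb;
    }

    // Worker nodes required, rounded up because nodes come in whole units.
    static int nodesNeeded(double totalGb, double nodeCapacityGb) {
        return (int) Math.ceil(totalGb / nodeCapacityGb);
    }

    public static void main(String[] args) {
        int services = 20;            // from the example in the list above
        double perServiceGb = 1.0;    // implied by "20 microservices ~ 20GB"
        double nodeCapacityGb = 16.0; // assumption: usable memory per worker node

        double total = totalMemoryGb(services, perServiceGb);
        System.out.println("Total memory: " + total + " GB");
        System.out.println("Worker nodes: " + nodesNeeded(total, nodeCapacityGb));
    }
}
```

Lowering the per-service figure in this sketch shows directly how a smaller footprint translates into fewer worker nodes.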

Container-first design

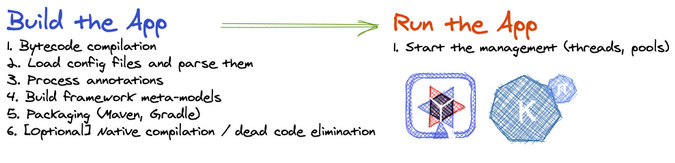

Traditional frameworks in the Java ecosystem come at a cost in terms of the memory and startup time required to initialize those frameworks, including configuration processing, classpath scanning, class loading, annotation processing, and building a metamodel of the world, which the framework requires to operate. This is multiplied over and over for different frameworks.

Quarkus helps fix these Java performance issues by "shifting left" almost all of the overhead to the build phase. By doing code and framework analysis, bytecode transformation, and dynamic metamodel generation only once, at build time, you end up with a highly optimized runtime executable that starts up super fast and doesn't require all the memory of a traditional startup because the work is done once, in the build phase.

(Daniel Oh, CC BY-SA 4.0)
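The "shift left" idea can be illustrated with a toy sketch. This is not how Quarkus is implemented; it only contrasts discovering annotated methods by reflection at every startup with reading a metamodel that was computed once ahead of time. The `Route` annotation, the `Greeting` class, and the method names are all hypothetical.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

public class ShiftLeftSketch {

    // Hypothetical annotation standing in for a framework's routing annotation.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface Route {
        String value();
    }

    // Hypothetical application class.
    static class Greeting {
        @Route("/hello")
        public String hello() { return "Hello"; }

        @Route("/bye")
        public String bye() { return "Bye"; }
    }

    // Traditional style: scan the class reflectively on every startup.
    static Map<String, String> discoverAtStartup(Class<?> type) {
        Map<String, String> routes = new TreeMap<>();
        for (Method m : type.getDeclaredMethods()) {
            Route r = m.getAnnotation(Route.class);
            if (r != null) {
                routes.put(r.value(), m.getName());
            }
        }
        return routes;
    }

    // Build-time style: the same metamodel written out once as plain data,
    // as a build step might generate it. No scanning happens at startup.
    static Map<String, String> generatedAtBuildTime() {
        Map<String, String> routes = new TreeMap<>();
        routes.put("/bye", "bye");
        routes.put("/hello", "hello");
        return routes;
    }

    public static void main(String[] args) {
        System.out.println("scanned:   " + discoverAtStartup(Greeting.class));
        System.out.println("generated: " + generatedAtBuildTime());
    }
}
```

Both paths produce the same route table; the difference is when the work happens. In the second case the startup path only reads data that already exists.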

More importantly, Quarkus allows you to build a native executable file that provides performance advantages, including amazingly fast boot time and incredibly small resident set size (RSS) memory, for instant scale-up and high-density memory utilization compared to the traditional cloud-native Java stack.

(Daniel Oh, CC BY-SA 4.0)

Here is a quick example of how you can build the native executable with a Java serverless function project using Quarkus.

1. Create the Quarkus serverless Maven project

This command generates a Quarkus project (e.g., quarkus-serverless-native) to create a simple function:

$ mvn io.quarkus:quarkus-maven-plugin:1.13.4.Final:create \
    -DprojectGroupId=org.acme \
    -DprojectArtifactId=quarkus-serverless-native \
    -DclassName="org.acme.getting.started.GreetingResource"

2. Build a native executable

You need GraalVM to build a native executable for the Java application. You can choose any GraalVM distribution, such as Oracle GraalVM Community Edition (CE) or Mandrel (the downstream distribution of Oracle GraalVM CE). Mandrel is designed to support building Quarkus native executables on OpenJDK 11.

Open pom.xml, and you will find this native profile. You'll use it to build a native executable:

<profiles>
    <profile>
        <id>native</id>
        <properties>
            <quarkus.package.type>native</quarkus.package.type>
        </properties>
    </profile>
</profiles>

Note: You can install the GraalVM or Mandrel distribution locally. You can also download the Mandrel container image to build it (as I did), so you need to run a container engine (e.g., Docker) locally.

Assuming you have started your container runtime already, run one of the following Maven commands.

For Docker:

$ ./mvnw package -Pnative \

-Dquarkus.native.container-build=true \

-Dquarkus.native.container-runtime=dockerFor Podman:

$ ./mvnw package -Pnative \

-Dquarkus.native.container-build=true \

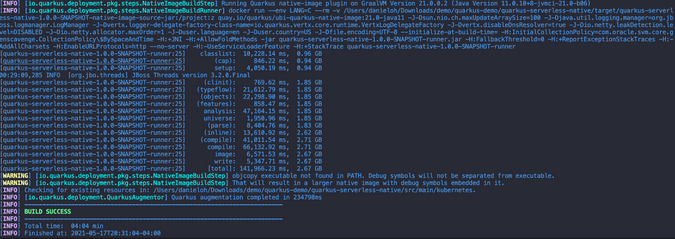

-Dquarkus.native.container-runtime=podmanThe output should end with BUILD SUCCESS.

(Daniel Oh, CC BY-SA 4.0)

Run the native executable directly without Java Virtual Machine (JVM):

$ target/quarkus-serverless-native-1.0.0-SNAPSHOT-runnerThe output will look like:

__ ____ __ _____ ___ __ ____ ______

--/ __ \/ / / / _ | / _ \/ //_/ / / / __/

-/ /_/ / /_/ / __ |/ , _/ ,< / /_/ /\ \

--\___\_\____/_/ |_/_/|_/_/|_|\____/___/

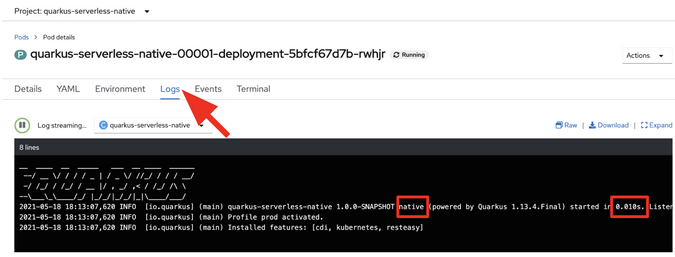

INFO [io.quarkus] (main) quarkus-serverless-native 1.0.0-SNAPSHOT native

(powered by Quarkus xx.xx.xx.) Started in 0.019s. Listening on: https://0.0.0.0:8080

INFO [io.quarkus] (main) Profile prod activated.

INFO [io.quarkus] (main) Installed features: [cdi, kubernetes, resteasy]Supersonic! That's 19 milliseconds to startup. The time might be different in your environment.

It also has extremely low memory usage, as the Linux ps utility reports. While the app is running, run this command in another terminal:

$ ps -o pid,rss,command -p $(pgrep -f runner)

You should see something like:

PID   RSS   COMMAND
10246 11360 target/quarkus-serverless-native-1.0.0-SNAPSHOT-runner

This process is using around 11MB of memory (RSS). Pretty compact!

Note: The RSS and memory usage of any app, including Quarkus, will vary depending on your specific environment and will rise as the application experiences load.
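The "around 11MB" figure follows from the RSS column, which Linux ps reports in kilobytes. As a small sketch of that conversion, the following parses a row shaped like the sample output and converts the value; the class and method names are illustrative assumptions, and the row is the sample from this article rather than a new measurement.

```java
import java.util.Locale;

public class RssFromPs {

    // Parses one data row of `ps -o pid,rss,command` and returns the RSS column (KiB).
    static long rssKib(String psRow) {
        String[] cols = psRow.trim().split("\\s+", 3); // pid, rss, command
        return Long.parseLong(cols[1]);
    }

    // Converts KiB to MiB.
    static double kibToMib(long kib) {
        return kib / 1024.0;
    }

    public static void main(String[] args) {
        // Sample row copied from the ps output shown in this article.
        String row = "10246 11360 target/quarkus-serverless-native-1.0.0-SNAPSHOT-runner";
        long kib = rssKib(row);
        System.out.printf(Locale.ROOT, "RSS: %d KiB = %.1f MiB%n", kib, kibToMib(kib));
    }
}
```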

You can also access the function through its REST API. The output should be Hello RESTEasy:

$ curl localhost:8080/hello
Hello RESTEasy

3. Deploy the functions to Knative service

If you haven't already, create a namespace (e.g., quarkus-serverless-native) on OKD (OpenShift Kubernetes Distribution) to deploy this native executable as a serverless function. Then add a quarkus-openshift extension for Knative service deployment:

$ ./mvnw -q quarkus:add-extension -Dextensions="openshift"

Append the following variables to src/main/resources/application.properties to configure Knative and Kubernetes resources:

quarkus.container-image.group=quarkus-serverless-native
quarkus.container-image.registry=image-registry.openshift-image-registry.svc:5000
quarkus.native.container-build=true
quarkus.kubernetes-client.trust-certs=true
quarkus.kubernetes.deployment-target=knative
quarkus.kubernetes.deploy=true
quarkus.openshift.build-strategy=docker

Build the native executable, then deploy it to the OKD cluster directly:

$ ./mvnw clean package -Pnative

Note: Make sure to log in to the right project (e.g., quarkus-serverless-native) using the oc login command ahead of time.

The output should end with BUILD SUCCESS. It will take a few minutes to complete a native binary build and deploy a new Knative service. After successfully creating the service, you should see a Knative service (KSVC) and revision (REV) using either the kubectl or oc command tool:

$ kubectl get ksvc
NAME                        URL                                                 [...]
quarkus-serverless-native   https://quarkus-serverless-native-[...].SUBDOMAIN   True

$ kubectl get rev
NAME                              CONFIG NAME                 K8S SERVICE NAME                  GENERATION   READY   REASON
quarkus-serverless-native-00001   quarkus-serverless-native   quarkus-serverless-native-00001   1            True

4. Access the native executable function

Retrieve the serverless function's endpoint by running this kubectl command:

$ kubectl get rt/quarkus-serverless-native

The output should look like:

NAME                        URL                                                                      READY   REASON
quarkus-serverless-native   https://quarkus-serverless-restapi-quarkus-serverless-native.SUBDOMAIN   True

Access the route URL with a curl command:

$ curl https://quarkus-serverless-restapi-quarkus-serverless-native.SUBDOMAIN/hello

In less than one second, you will get the same result as you got locally:

Hello RESTEasy

When you access the Quarkus running pod's logs in the OKD cluster, you will see the native executable is running as the Knative service.

(Daniel Oh, CC BY-SA 4.0)
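The curl call above can also be made from Java, which is handy for checking the response time from a client's point of view. This is a minimal sketch using the JDK's built-in HTTP client (Java 11 or later). The class name is hypothetical, and the URL is an assumption: pass your own route URL as the first argument, since SUBDOMAIN depends on your cluster. Without an argument it falls back to the local endpoint used earlier.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

public class FunctionClient {

    // Sends a GET request and returns the response body as text.
    static String call(String url) throws Exception {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        HttpResponse<String> response =
                client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    public static void main(String[] args) {
        // Assumption: the route URL is supplied by the caller; default is the local run.
        String url = args.length > 0 ? args[0] : "http://localhost:8080/hello";
        long start = System.nanoTime();
        try {
            String body = call(url);
            long elapsedMs = (System.nanoTime() - start) / 1_000_000;
            System.out.println(body + " (" + elapsedMs + " ms)");
        } catch (Exception e) {
            System.out.println("Request to " + url + " failed: " + e);
        }
    }
}
```

The first request to a Knative service that has scaled to zero includes the time to start a pod, so the measured time for that call says more about startup than about the function itself.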

What's next?

You can optimize Java serverless functions with GraalVM distributions and deploy them on Knative with Kubernetes. Quarkus enables this performance optimization with simple configuration, in the same way as for normal microservices.

The next article in this series will guide you on making portable functions across multiple serverless platforms with no code changes.
