The Graphics and Visualization (GVIS) lab at NASA's Glenn Research Center in Cleveland, Ohio, specializes in creating scientific visualizations and virtual reality programs for scientists at Glenn and beyond. I am thrilled to be a member of the small army of interns in the GVIS lab. So are Carolyn Holthouse, Joe Porter, and Jason Boccuti, interns from the Air Force Research Laboratory (AFRL) and Wright Brothers Institute's Discovery Lab who are working remotely at NASA Glenn. Their project involves robots, open source software, and virtual reality. I caught up with Carolyn, Jason, and Joe to talk about it.

What is your project?

The goal of our project is to provide a platform that will enhance collaboration between the AFRL and NASA Glenn. Our project blurs the line between the physical and virtual worlds through immersive telepresence and augmented and virtual reality technology. We are building a virtual lab space where local and remote users can share data and take part in research projects, either virtually or through telepresence systems. We are using the SLOPE lab at NASA Glenn as a pilot facility to demonstrate the capabilities of OpenSim, the open source virtual reality software we are using. Anything that happens in our virtual environment will be replicated physically, and vice versa. However, the system we develop can easily be generalized to suit any lab space and its collaborative needs.

Why virtual reality?

Virtual reality is becoming a huge area of interest. For our project, it provides accessibility to all kinds of users. For instance, our virtual lab space will be used in the AFRL's Virtual Science and Engineering Festival to teach middle- and high-school students how rovers are designed and tested for space missions. Virtual reality will make STEM opportunities accessible to regions that currently lack direct access to them. Also, studies have shown that humans learn better through immersion in an environment, and the technologies we are developing will give remote users a more immersive, realistic experience.

The AFRL is very interested in pursuing OpenSim, an open source virtual world platform modeled on Second Life. Many of the projects running out of the Discovery Lab this summer rely heavily on some aspect of Second Life and on testing the limits of the software. One of the teams this summer is working on a project requested by Dayton's Air Camp to create a virtual Air Camp that will be more widely accessible to kids of all ages.

What will be the benefits of your project?

The primary beneficiaries of our project are the AFRL and NASA Glenn, as the project is intended to be the start of a long-term collaboration between the two facilities. We also hope to provide a more engaging way to show the public, especially young people, how research is done. Furthermore, the system developed in our project will improve the quality of research by reducing errors that stem from misunderstandings when data is shared in clumsy ways.

How does your project work?

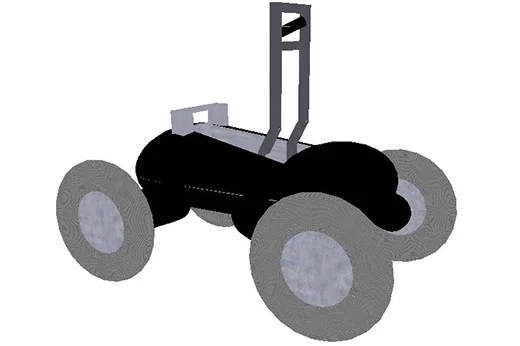

Our project uses three major tools: OpenSim, ThingSpeak, and Java/Swing. In OpenSim, we have created a virtual laboratory on the AFRL Discovery Lab's grid and modeled several of the rovers at NASA Glenn Research Center's SLOPE Lab. These rovers can be driven in the virtual world, and they track information about themselves such as their location, velocity, and power used. While being driven, the virtual robots connect to a ThingSpeak server (ThingSpeak is also open source) and upload this information in simulated real time. We just created a Java Swing application that lets users drive a physical robot over a WiFi connection using their keyboard's arrow keys. We are currently connecting this application to the ThingSpeak server so that it can upload the same information from the physical robot. Once that is done, the physical and virtual robots will not only upload their data while being driven but also check the server for changes in each other's data. This way, each stays in sync with the other: if someone moves the physical robot, the virtual robot will simulate similar movement, and if someone moves the virtual robot in the lab, the physical robot will move to match the virtual robot's state.
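To make that round trip more concrete, here is a minimal Java sketch of the three pieces involved: mapping arrow keys to drive commands, building ThingSpeak write and read requests, and deciding whether to mirror the other robot's latest entry. The command strings, the field layout (field1 through field4 for x, y, velocity, and power), and the entry-id rule are assumptions for illustration, not the team's actual code. The `/update` and `/channels/<id>/feeds/last.json` paths are ThingSpeak's standard REST endpoints. The sketch needs Java 11 or later for `java.net.http`.

```java
import java.awt.event.KeyEvent;
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Sketch only: command names, field numbers, and the entry-id rule are
// hypothetical choices, not the project's actual implementation.
final class RoverSync {

    // Hypothetical mapping from Swing arrow-key codes to drive commands
    // that the desktop app would send to the physical robot over WiFi.
    static String commandFor(int keyCode) {
        switch (keyCode) {
            case KeyEvent.VK_UP:    return "FORWARD";
            case KeyEvent.VK_DOWN:  return "BACKWARD";
            case KeyEvent.VK_LEFT:  return "TURN_LEFT";
            case KeyEvent.VK_RIGHT: return "TURN_RIGHT";
            default:                return "STOP";
        }
    }

    private static String enc(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8);
    }

    // Builds a ThingSpeak channel-update request. baseUrl can point at
    // api.thingspeak.com or at a self-hosted ThingSpeak instance.
    // Assumed field layout: field1=x, field2=y, field3=velocity, field4=power.
    static String updateUrl(String baseUrl, String writeKey,
                            double x, double y, double velocity, double power) {
        return baseUrl + "/update?api_key=" + enc(writeKey)
                + "&field1=" + x + "&field2=" + y
                + "&field3=" + velocity + "&field4=" + power;
    }

    // Builds the request for the most recent entry in the other robot's channel.
    static String lastEntryUrl(String baseUrl, long channelId, String readKey) {
        return baseUrl + "/channels/" + channelId
                + "/feeds/last.json?api_key=" + enc(readKey);
    }

    // Assumes each robot writes to its own channel and reads the other's, so it
    // mirrors an entry only if it is newer than the last one it already applied.
    static boolean shouldMirror(long remoteEntryId, long lastAppliedEntryId) {
        return remoteEntryId > lastAppliedEntryId;
    }

    // Plain HTTP GET, usable for both the update and the read requests above.
    static String get(String url) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) {
        // Prints a sample update URL without touching the network.
        System.out.println(updateUrl("https://api.thingspeak.com", "YOUR_WRITE_KEY",
                1.5, 2.0, 0.25, 12.0));
    }
}
```

Keeping `baseUrl` as a parameter means the same code can talk to ThingSpeak's hosted service or to a self-hosted instance. A real polling loop would call `get(lastEntryUrl(...))`, parse `entry_id` and the fields from the returned JSON with a JSON library, and apply the new state only when `shouldMirror` returns true.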

A SLOPE Lab Scarab rover.

A virtual reality Scarab rover.

How has open source helped you with your work?

Open source has been an extremely useful aspect of the software tools we are using. With OpenSim, we have access to many valuable existing tools and libraries built into the framework, and, through the admins at the Discovery Lab, we can completely modify aspects of the virtual world. Similarly, ThingSpeak has made hosting sensor data significantly easier than non-open source alternatives. Besides offering a free web server for hosting sensor data, ThingSpeak also lets users install the platform on their own servers, so they can run their own instance and host their data internally. All in all, it's been great to have the freedoms that open source allows, as well as the support of its communities.

How are you testing your project?

We currently have a virtual lab space modeled after the SLOPE lab.

The SLOPE lab.

Virtual reality SLOPE lab.

Jason has been working on getting an app on his computer to communicate with a physical robot. Joe and Carolyn have been developing virtual robots and experimenting with ways to enhance the user experience in the virtual lab. We have been able to connect an Oculus Rift virtual reality headset to the OpenSim viewer we use, which gives the user a fully immersive experience in the virtual lab.

VR headset testing

We also have a Microsoft Kinect sensor that tracks the user's body position and moves the avatar accordingly. For example, stepping forward makes the avatar walk forward, and raising one's arms makes it fly upward. Finally, an Xbox 360 controller lets the user click on objects while standing away from the computer and using the Oculus or Kinect systems. Eventually, we will run tests with students working at the Discovery Lab: they will enter our virtual lab space and drive the test rovers, which will in turn move the physical robots we have on site here at NASA.
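Which software translates Kinect data into avatar movement in this setup isn't specified. As a rough illustration of the kind of rule involved, the Java sketch below maps a few tracked joint heights and the torso's distance from the sensor to avatar actions. The inputs, the 0.15 m step threshold, and the action names are all hypothetical.

```java
// Hypothetical gesture rules; inputs are joint positions in meters in the
// sensor's coordinate space (y points up, z is distance from the sensor).
final class GestureMapper {

    // Assumed: how far the torso must move toward the sensor to count as a step.
    static final double STEP_THRESHOLD_M = 0.15;

    static String avatarAction(double baselineTorsoZ, double torsoZ,
                               double headY, double leftHandY, double rightHandY) {
        // Both hands above the head: fly upward (checked first so it takes priority).
        if (leftHandY > headY && rightHandY > headY) {
            return "FLY_UP";
        }
        // Torso has moved toward the sensor past the threshold: walk forward.
        if (baselineTorsoZ - torsoZ > STEP_THRESHOLD_M) {
            return "WALK_FORWARD";
        }
        return "IDLE";
    }

    public static void main(String[] args) {
        System.out.println(avatarAction(2.0, 1.8, 1.6, 1.0, 1.0)); // WALK_FORWARD
        System.out.println(avatarAction(2.0, 2.0, 1.6, 1.9, 1.9)); // FLY_UP
    }
}
```

A practical mapper would also smooth joint data over several frames and reset the baseline when the user steps back, so a single noisy frame doesn't trigger movement.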
