Linux containers have changed the way we run, build, and manage applications. As more and more platforms become cloud-native, containers are playing a more important role in every enterprise's infrastructure. Kubernetes (K8s) is currently the most well-known solution for managing containers, whether they run in a private, public, or hybrid cloud.

With a container application platform, we can dynamically create a whole environment to run a task and discard it afterward. In an earlier post, we covered how to use Jenkins to run builds and unit tests in containers. Before reading further, I recommend taking a look at that post so you're familiar with the basic principles of the solution.

Now let's look at how to run integration tests by starting multiple containers to provide a whole test environment.

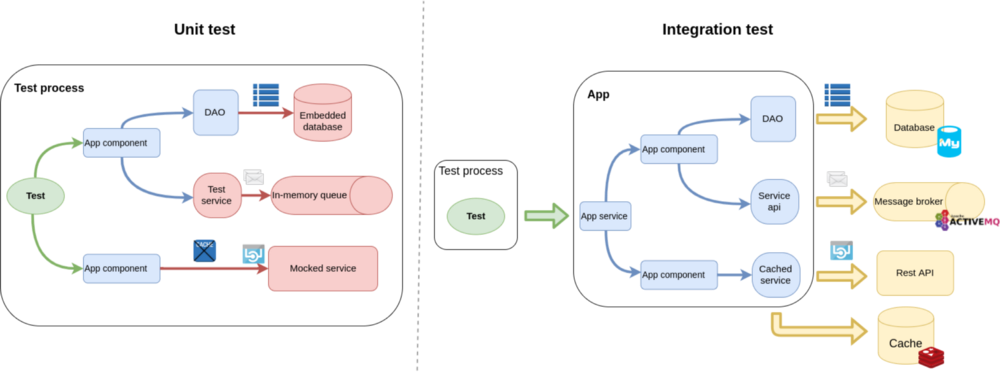

Let's assume we have a backend application that depends on other services, such as databases, message brokers, or web services. During unit testing, we try to use embedded solutions or simply mock up these endpoints to make sure no network connections are required. This requires changes in our code for the scope of the test.

The purpose of the integration test is to verify how the application behaves with other parts of the solution stack. Providing a service depends on more than just our codebase. The overall solution is a mix of modules (e.g., databases with stored procedures, message brokers, or distributed cache with server-side scripts) that must be wired together the right way to provide the expected functionality. This can only be tested by running all these parts next to each other, without enabling "test mode" within our application.

Whether "unit test" and "integration test" are the right terms in this case is debatable. For simplicity's sake, I'll call tests that run within one process without any external dependencies "unit tests" and the ones running the app in production mode making network connections "integration tests."

Maintaining a static environment for such tests can be troublesome and a waste of resources; this is where the ephemeral nature of dynamic containers comes in handy.

The codebase for this post can be found in my kubernetes-integration-test GitHub repository. It contains an example Red Hat Fuse 7 application (/app-users) that takes messages from AMQ, queries data from a MariaDB database, and calls a REST API. The repo also contains the integration test project (/integration-test) and the different Jenkinsfiles explained in this post.

Here are the software versions used in this tutorial:

- Red Hat Container Development Kit (CDK) v3.4

- OpenShift v3.9

- Kubernetes v1.9

- Jenkins images v3.9

- Jenkins kubernetes-plugin v1.7

A fresh start every time

We want to achieve the following goals with our integration test:

- start the production-ready package of our app under test,

- start an instance of all the dependency systems required,

- run tests that interact only via the public service endpoints with the app,

- ensure that nothing persists between executions, so we don't have to worry about restoring the initial state, and

- allocate resources only during test execution.

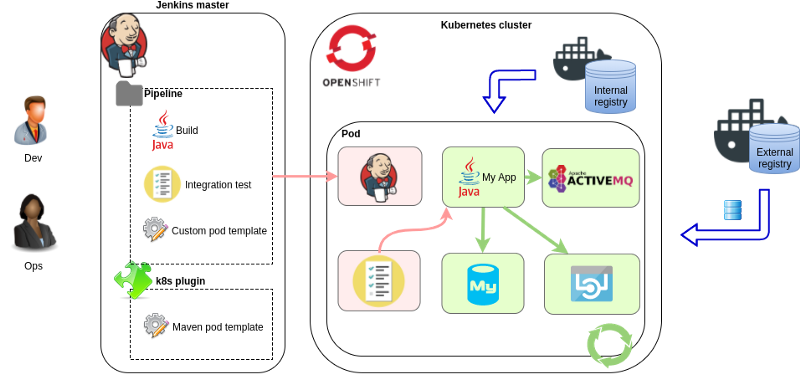

The solution is based on Jenkins and the jenkins-kubernetes-plugin. Jenkins can run tasks on different agent nodes, while the plugin makes it possible to create these nodes dynamically on Kubernetes. An agent node is created only for the task execution and is deleted afterward.

We need to define the agent node pod template first. The jenkins-master image for OpenShift comes with predefined podTemplates for Maven and NodeJS builds, and admins can add such "static" pod templates to the plugin configuration.

Fortunately, defining the pod template for our agent node directly in our project is possible if we use a Jenkins pipeline. This is obviously a more flexible way, as the whole execution environment can be maintained in code by the development team. Let's see an example:

podTemplate(

label: 'app-users-it',

cloud: 'openshift', //This needs to match the cloud name in jenkins-kubernetes-plugin config

containers: [

//Jenkins agent. Also executes the integration test. Having a 'jnlp' container is mandatory.

containerTemplate(name: 'jnlp',

image: 'registry.access.redhat.com/openshift3/jenkins-slave-maven-rhel7:v3.9',

resourceLimitMemory: '512Mi',

args: '${computer.jnlpmac} ${computer.name}',

envVars: [

//Heap for mvn and surefire process is 1/4 of resourceLimitMemory by default

envVar(key: 'JNLP_MAX_HEAP_UPPER_BOUND_MB', value: '64')

]),

//App under test

containerTemplate(name: 'app-users',

image: '172.30.1.1:5000/myproject/app-users:latest',

resourceLimitMemory: '512Mi',

envVars: [

envVar(key: 'SPRING_PROFILES_ACTIVE', value: 'k8sit'),

envVar(key: 'SPRING_CLOUD_KUBERNETES_ENABLED', value: 'false')

]),

//DB

containerTemplate(name: 'mariadb',

image: 'registry.access.redhat.com/rhscl/mariadb-102-rhel7:1',

resourceLimitMemory: '256Mi',

envVars: [

envVar(key: 'MYSQL_USER', value: 'myuser'),

envVar(key: 'MYSQL_PASSWORD', value: 'mypassword'),

envVar(key: 'MYSQL_DATABASE', value: 'testdb'),

envVar(key: 'MYSQL_ROOT_PASSWORD', value: 'secret')

]),

//AMQ

containerTemplate(name: 'amq',

image: 'registry.access.redhat.com/jboss-amq-6/amq63-openshift:1.3',

resourceLimitMemory: '256Mi',

envVars: [

envVar(key: 'AMQ_USER', value: 'test'),

envVar(key: 'AMQ_PASSWORD', value: 'secret')

]),

//External Rest API (provided by mockserver)

containerTemplate(name: 'mockserver',

image: 'jamesdbloom/mockserver:mockserver-5.3.0',

resourceLimitMemory: '256Mi',

envVars: [

envVar(key: 'LOG_LEVEL', value: 'INFO'),

envVar(key: 'JVM_OPTIONS', value: '-Xmx128m'),

])

]

)

{

node('app-users-it') {

/* Run the steps:

* - pull source

* - prepare dependency systems, run sqls

* - run integration test

* ...

*/

}

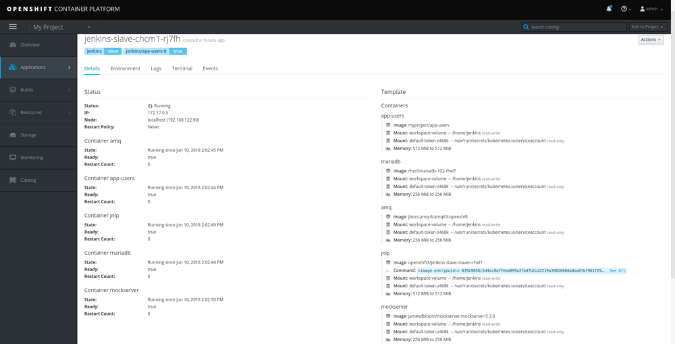

}This pipeline will create all the containers, pulling the given Docker images running them within the same pod. This means that the containers will share the localhost interface, so the services can access each other's ports (but we have to think about port-binding collisions). This is how the running pod looks in OpenShift web console:
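Because all containers in the pod share one network namespace, two processes that listen on the same port cannot both start. One cheap safeguard is to list the ports each container is expected to listen on and check for duplicates before adding a new container to the template. The Java sketch below does this check. The port numbers are illustrative assumptions (typical defaults such as 3306 for MariaDB and 61616 for AMQ OpenWire), and "extra-service" is a hypothetical container added only to show a clash. Check each image's documentation for the real ports.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class PortCollisions {
    // Groups containers by the ports they listen on and keeps only ports claimed more than once.
    static Map<Integer, List<String>> findCollisions(Map<String, List<Integer>> portsByContainer) {
        Map<Integer, List<String>> owners = new LinkedHashMap<>();
        for (Map.Entry<String, List<Integer>> e : portsByContainer.entrySet()) {
            for (int port : e.getValue()) {
                owners.computeIfAbsent(port, p -> new ArrayList<>()).add(e.getKey());
            }
        }
        owners.values().removeIf(containers -> containers.size() < 2);
        return owners;
    }

    public static void main(String[] args) {
        // Illustrative port lists (assumptions), not read from the images themselves.
        Map<String, List<Integer>> ports = new LinkedHashMap<>();
        ports.put("app-users", List.of(8080));     // hypothetical HTTP port of the app
        ports.put("mariadb", List.of(3306));
        ports.put("amq", List.of(61616));
        ports.put("mockserver", List.of(1080));
        ports.put("extra-service", List.of(8080)); // hypothetical container that clashes with app-users
        System.out.println(findCollisions(ports));  // {8080=[app-users, extra-service]}
    }
}
```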

The images are set by their Docker URL (OpenShift image streams are not supported here), so the cluster must be able to reach those registries. In the example above, we previously built the image of our app within the same Kubernetes cluster and are now pulling it from the internal registry: 172.30.1.1 (docker-registry.default.svc). This image is our release package that may be deployed to a dev, test, or prod environment. It's started with a k8sit application properties profile where the connection URLs point to 127.0.0.1.
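Each image is given as a full reference, so it helps to be clear about which registry the cluster has to reach for each one. The Java sketch below splits a reference into registry, repository, and tag using the common Docker convention: the first path component is treated as a registry host only if it contains a "." or ":" or is "localhost", and otherwise Docker Hub is implied. This is a simplified parser for illustration only. It ignores digests (@sha256:...) and does not validate names.

```java
final class ImageRef {
    final String registry;
    final String repository;
    final String tag;

    ImageRef(String registry, String repository, String tag) {
        this.registry = registry;
        this.repository = repository;
        this.tag = tag;
    }

    // Simplified parsing: no digest support, no validation, no "library/" normalization.
    static ImageRef parse(String ref) {
        String registry = "docker.io"; // Docker Hub is implied when no registry host is given
        String rest = ref;
        int slash = ref.indexOf('/');
        if (slash > 0) {
            String first = ref.substring(0, slash);
            // The first path component is a registry host only if it looks like one.
            if (first.contains(".") || first.contains(":") || first.equals("localhost")) {
                registry = first;
                rest = ref.substring(slash + 1);
            }
        }
        String tag = "latest"; // default tag when none is given
        int colon = rest.lastIndexOf(':');
        if (colon > rest.lastIndexOf('/')) { // a ':' after the last '/' starts the tag
            tag = rest.substring(colon + 1);
            rest = rest.substring(0, colon);
        }
        return new ImageRef(registry, rest, tag);
    }

    @Override
    public String toString() {
        return registry + " | " + repository + " | " + tag;
    }

    public static void main(String[] args) {
        String[] images = {
            "172.30.1.1:5000/myproject/app-users:latest",
            "registry.access.redhat.com/rhscl/mariadb-102-rhel7:1",
            "jamesdbloom/mockserver:mockserver-5.3.0"
        };
        for (String image : images) {
            System.out.println(parse(image));
        }
    }
}
```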

Thinking about memory usage for containers running Java processes is important. The Java versions current at the time of writing (Java 8 and 9) ignore the container memory limit by default and set a much higher heap size. Version 3.9 jenkins-slave images support memory limits via environment variables much better than earlier versions. Setting JNLP_MAX_HEAP_UPPER_BOUND_MB=64 was enough for us to run Maven tasks with a 512MiB limit.
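To check which heap size a JVM actually picked inside a container, you can print Runtime.maxMemory() from a tiny Java program, or make the same call from within a test. This is a generic diagnostic sketch and is not part of the example repository. Run it inside the container with the same JVM options and compare the value to the container's memory limit.

```java
final class HeapCheck {
    // Maximum heap the running JVM will attempt to use, in MiB.
    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024 * 1024);
    }

    public static void main(String[] args) {
        System.out.println("Max heap: " + maxHeapMiB() + " MiB");
        System.out.println("Available processors: " + Runtime.getRuntime().availableProcessors());
    }
}
```

If the printed heap is close to or above the container limit, the process risks being killed for exceeding its memory limit rather than failing with a Java OutOfMemoryError.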

All containers within the pod have a shared emptyDir volume mounted at /home/jenkins (the default workingDir). The Jenkins agent uses it to run pipeline step scripts within the containers, and this is where we check out our integration test repository. It is also the current directory where the steps are executed, unless they are within a dir('relative_dir') block. Here are the pipeline steps for the example above:

```groovy
podTemplate(...)
{
  node('app-users-it') { //must match the label in the podTemplate
    stage('Pull source') {
      checkout scm // pull the git repo of the Jenkinsfile
      //or: git url: 'https://github.com/bszeti/kubernetes-integration-test.git'
    }
    dir ("integration-test") { //In this example the integration test project is in a sub directory
      stage('Prepare test') {
        container('mariadb') {
          //requires mysql tool
          sh 'sql/setup.sh'
        }
        //requires curl and python
        sh 'mockserver/setup.sh'
      }
      //These env vars are used by the tests to send message to users.in queue
      withEnv(['AMQ_USER=test',
               'AMQ_PASSWORD=secret']) {
        stage('Build and run test') {
          try {
            //Execute the integration test
            sh 'mvn -s ../configuration/settings.xml -B clean test'
          } finally {
            //Save test results in Jenkins
            junit 'target/surefire-reports/*.xml'
          }
        }
      }
    }
  }
}
```

The pipeline steps are run on the jnlp container unless they are within a container('container_name') block:

- First, we check out the source of the integration project. In this case, it's in the integration-test subdirectory within the repo.
- The sql/setup.sh script creates tables and loads test data in the database. It requires the mysql tool, so it must be run in the mariadb container.
- Our application (app-users) calls a REST API. We have no image to start this service, so we use MockServer to bring up the HTTP endpoint. It's configured by mockserver/setup.sh.
- The integration tests are written in Java with JUnit and executed by Maven. They could be written in anything else; this is simply the stack we're familiar with.
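On the test side, the only wiring needed is to read the credentials passed in by withEnv and to connect to every dependency on 127.0.0.1, because all containers share the pod's network. Below is a minimal plain-Java sketch. It assumes the services' default ports (3306 for MariaDB, 61616 for AMQ OpenWire, 1080 for MockServer). The class name and URL formats are illustrative and are not taken from the example repository.

```java
import java.util.Map;

final class ItConfig {
    final String amqUser;
    final String amqPassword;
    final String jdbcUrl;
    final String brokerUrl;
    final String mockServerUrl;

    ItConfig(Map<String, String> env) {
        // Credentials come from withEnv([...]) in the pipeline; fall back to the values used there.
        amqUser = env.getOrDefault("AMQ_USER", "test");
        amqPassword = env.getOrDefault("AMQ_PASSWORD", "secret");
        // All containers share one network namespace, so every dependency is on localhost.
        jdbcUrl = "jdbc:mariadb://127.0.0.1:3306/testdb"; // assumed default MariaDB port
        brokerUrl = "tcp://127.0.0.1:61616";               // assumed AMQ OpenWire port
        mockServerUrl = "http://127.0.0.1:1080";           // assumed MockServer port
    }

    public static void main(String[] args) {
        ItConfig cfg = new ItConfig(System.getenv());
        System.out.println("AMQ user: " + cfg.amqUser + ", broker: " + cfg.brokerUrl);
    }
}
```

Passing the environment in as a Map, rather than calling System.getenv() inside the constructor, keeps the class easy to exercise outside the pod.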

There are plenty of configuration parameters for podTemplate and containerTemplate following the Kubernetes resource API, with a few differences. Environment variables, for example, can be defined at the container level as well as the pod level. Volumes can be added to the pod, but they are mounted on each container at the same mountPath:

```groovy
podTemplate(...
  containers: [...],
  volumes:[
    configMapVolume(mountPath: '/etc/myconfig',
                    configMapName: 'my-settings'),
    persistentVolumeClaim(mountPath: '/home/jenkins/myvolume',
                          claimName:'myclaim')
  ],
  envVars: [
    envVar(key: 'ENV_NAME', value: 'my-k8sit')
  ]
)
```

Sounds easy, but…

Running multiple containers in the same pod is a nice way to attach them, but we can run into an issue if our containers have entry points with different user IDs. Docker images used to run processes as root, but this is not recommended in production environments due to security concerns, so many images switch to a non-root user. Unfortunately, different images may use different uids (USER in a Dockerfile), which can cause file-permission issues if they use the same volume.

In this case, the source of conflict is the Jenkins workspace on the workingDir volume (/home/jenkins/workspace/). It is used for pipeline execution and for saving step outputs within each container. If we have steps in a container(…) block and the image's uid is different from the one in the jnlp container (and non-root), we'll get the following error:

```
touch: cannot touch '/home/jenkins/workspace/k8sit-basic/integration-test@tmp/durable-aa8f5204/jenkins-log.txt': Permission denied
```

Let's have a look at the USER in the images in our example:

- jenkins-slave-maven: uid 1001, gid 0
- mariadb: uid 27, gid 27
- amq: uid 185, gid 0
- mockserver: uid 0, gid 0 (requires anyuid)
- fuse-java-openshift: uid 185, gid 0
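The permission arithmetic behind this error is simple. A new directory typically gets mode 0777 & ~umask, so with a umask of 0022 the workspace directories end up as 0755. The owner (uid 1001 in the jnlp container) can write to them, but group and others can only read and traverse. A uid that is neither the owner nor root therefore cannot create files there, and the shared gid 0 does not help because the group write bit is also cleared. The Java sketch below models only the basic POSIX permission bits (no ACLs, primary group only) and shows which of the containers listed above can write.

```java
final class UmaskCheck {
    // Mode of a newly created directory when 0777 is requested and the umask is applied.
    static int dirMode(int umask) {
        return 0777 & ~umask;
    }

    // Basic POSIX check (no ACLs, primary gid only): can (uid, gid) create files in a
    // directory with this mode owned by (ownerUid, ownerGid)?
    static boolean canWrite(int mode, int ownerUid, int ownerGid, int uid, int gid) {
        if (uid == 0) return true;                      // root bypasses permission bits
        if (uid == ownerUid) return (mode & 0200) != 0; // owner write bit
        if (gid == ownerGid) return (mode & 0020) != 0; // group write bit
        return (mode & 0002) != 0;                      // others write bit
    }

    public static void main(String[] args) {
        int ownerUid = 1001, ownerGid = 0; // the jnlp container creates the workspace
        String[] names = {"jnlp", "mariadb", "amq", "mockserver"};
        int[][] ids = {{1001, 0}, {27, 27}, {185, 0}, {0, 0}};
        for (int umask : new int[] {0022, 0000}) {
            int mode = dirMode(umask);
            System.out.printf("umask %04o -> dir mode %04o%n", umask, mode);
            for (int i = 0; i < names.length; i++) {
                boolean ok = canWrite(mode, ownerUid, ownerGid, ids[i][0], ids[i][1]);
                System.out.printf("  %-10s uid %-4d writable: %b%n", names[i], ids[i][0], ok);
            }
        }
    }
}
```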

The default umask in the jnlp container is 0022, so steps in containers with uid 185 and uid 27 will run into the permission issue. The workaround is to change the default umask in the jnlp container so the workspace is accessible by any uid:

```groovy
containerTemplate(name: 'jnlp',
  image: 'registry.access.redhat.com/openshift3/jenkins-slave-maven-rhel7:v3.9',
  resourceLimitMemory: '512Mi',
  command: '/bin/sh -c',
  //change umask so any uid has permission to the jenkins workspace
  args: '"umask 0000; /usr/local/bin/run-jnlp-client ${computer.jnlpmac} ${computer.name}"',
  envVars: [
    envVar(key: 'JNLP_MAX_HEAP_UPPER_BOUND_MB', value: '64')
  ])
```

To see the whole Jenkinsfile that first builds the app and the Docker image before running the integration test, go to kubernetes-integration-test/Jenkinsfile.

In these examples, the integration test is run on the jnlp container because we picked Java and Maven for our test project and the jenkins-slave-maven image can execute that. This is, of course, not mandatory; we can use the jenkins-slave-base image as jnlp and have a separate container to execute the test. See the kubernetes-integration-test/Jenkinsfile-jnlp-base example, where we intentionally separate jnlp and use another container for Maven.

YAML template

The podTemplate and containerTemplate definitions support many configurations, but they lack a few parameters. For example:

- They can't assign environment variables from a ConfigMap, only from a Secret.
- They can't set a readiness probe for the containers. Without one, Kubernetes reports the pod as running right after kicking off the containers, so Jenkins starts executing the steps before the processes are ready to accept requests. This can lead to failures due to race conditions. These example pipelines typically work because checkout scm gives the containers enough time to start. Of course, a sleep helps, but defining readiness probes is the proper way.
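Until readiness probes are in place, another stopgap besides a fixed sleep is to have the test wait until each dependency accepts TCP connections on localhost. The Java sketch below polls a port with java.net.Socket until a connection succeeds or a deadline passes. It is only a rough substitute for a readiness probe, because an open port does not guarantee that the service has finished initializing. The ports in the usage line are assumed defaults and are not taken from the example repository.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class WaitForPort {
    // Polls host:port until a TCP connection succeeds or timeoutMillis elapses.
    static boolean waitFor(String host, int port, long timeoutMillis) throws InterruptedException {
        long deadline = System.currentTimeMillis() + timeoutMillis;
        while (true) {
            try (Socket socket = new Socket()) {
                socket.connect(new InetSocketAddress(host, port), 500); // 500 ms per attempt
                return true;
            } catch (IOException notYet) {
                if (System.currentTimeMillis() >= deadline) {
                    return false;
                }
                Thread.sleep(250); // back off briefly before retrying
            }
        }
    }

    // Usage: java WaitForPort 3306 61616 1080   (assumed ports of MariaDB, AMQ, MockServer)
    public static void main(String[] args) throws InterruptedException {
        for (String arg : args) {
            int port = Integer.parseInt(arg);
            boolean ready = waitFor("127.0.0.1", port, 60_000);
            System.out.println("port " + port + (ready ? " is accepting connections" : " did not open in time"));
        }
    }
}
```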

To solve the problem, a YAML parameter was added to the podTemplate() in kubernetes-plugin (v1.5+). It supports a complete Kubernetes pod resource definition, so we can define any configuration for the pod:

```groovy
podTemplate(
  label: 'app-users-it',
  cloud: 'openshift',
  //yaml configuration inline. It's a yaml so indentation is important.
  yaml: '''
apiVersion: v1
kind: Pod
metadata:
  labels:
    test: app-users
spec:
  containers:
  #Java agent, test executor
  - name: jnlp
    image: registry.access.redhat.com/openshift3/jenkins-slave-maven-rhel7:v3.9
    command:
    - /bin/sh
    args:
    - -c
    #Note the args and syntax for run-jnlp-client
    - umask 0000; /usr/local/bin/run-jnlp-client $(JENKINS_SECRET) $(JENKINS_NAME)
    resources:
      limits:
        memory: 512Mi
  #App under test
  - name: app-users
    image: 172.30.1.1:5000/myproject/app-users:latest
    ...
''',
  //volumes, for example, can be defined in the yaml or as a parameter
  volumes:[...]
) {...}
```

Make sure to update the Kubernetes plugin in Jenkins to v1.5+; otherwise, the YAML parameter will be silently ignored.

The YAML definition and the other podTemplate parameters are supposed to be merged, but it's less error-prone to use only one or the other. If defining the YAML inline in the pipeline makes it difficult to read, see kubernetes-integration-test/Jenkinsfile-yaml, which is an example of loading it from a file.

Declarative Pipeline syntax

All the example pipelines above used the Scripted Pipeline syntax, which is practically a Groovy script with pipeline steps. The Declarative Pipeline syntax is a new approach that enforces more structure on the script by providing less flexibility and allowing no "Groovy hacks." It results in cleaner code, but you may have to switch back to the scripted syntax in complex scenarios.

In Declarative Pipelines, the kubernetes-plugin (v1.7+) supports only the YAML definition of the pod:

```groovy
pipeline {
  agent {
    kubernetes {
      label 'app-users-it'
      cloud 'openshift'
      defaultContainer 'jnlp'
      yaml '''
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: app-users
spec:
  containers:
  #Java agent, test executor
  - name: jnlp
    image: registry.access.redhat.com/openshift3/jenkins-slave-maven-rhel7:v3.9
    command:
    - /bin/sh
    args:
    - -c
    - umask 0000; /usr/local/bin/run-jnlp-client $(JENKINS_SECRET) $(JENKINS_NAME)
    ...
'''
    }
  }
  stages {
    stage('Run integration test') {
      environment {
        AMQ_USER = 'test'
        AMQ_PASSWORD = 'secret'
      }
      steps {
        dir ("integration-test") {
          container('mariadb') {
            sh 'sql/setup.sh'
          }
          sh 'mockserver/setup.sh'
          //Run the tests.
          //Somehow simply "mvn ..." doesn't work here
          sh '/bin/bash -c "mvn -s ../configuration/settings.xml -B clean test"'
        }
      }
      post {
        always {
          junit testResults: 'integration-test/target/surefire-reports/*.xml', allowEmptyResults: true
        }
      }
    }
  }
}
```

Setting a different agent for each stage is also possible, as in kubernetes-integration-test/Jenkinsfile-declarative.

Try it on Minishift

If you'd like to try the solution described above, you'll need access to a Kubernetes cluster. At Red Hat, we use OpenShift, which is an enterprise-ready version of Kubernetes. There are several ways to have access to a full-scale cluster:

- OpenShift Container Platform on your own infrastructure

- OpenShift Dedicated cluster hosted on a public cloud

- OpenShift Online on-demand public environment

Running a small one-node cluster on your local machine is also possible, and it is probably the easiest way to try things. Let's see how to set up Red Hat CDK (or Minikube) to run our tests.

After downloading Red Hat CDK, prepare the Minishift environment:

- Run setup: minishift setup-cdk
- Set the internal Docker registry as insecure: minishift config set insecure-registry 172.30.0.0/16
  This is needed because the kubernetes-plugin pulls the image directly from the internal registry, which is not HTTPS.
- Start the Minishift virtual machine (use your free Red Hat account): minishift --username me@mymail.com --password ... --memory 4GB start
- Note the console URL (or get it by entering minishift console --url).
- Add the oc tool to the path: eval $(minishift oc-env)
- Log in to the OpenShift API (admin/admin): oc login https://192.168.42.84:8443
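The insecure-registry setting in the steps above takes a CIDR range rather than a single host. 172.30.0.0/16 matches every address whose first 16 bits are 172.30, which covers the internal registry at 172.30.1.1 that the pod templates pull from. A small Java sketch of that membership check (IPv4 only):

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

final class CidrCheck {
    // Converts a dotted IPv4 literal to a 32-bit int. InetAddress.getByName does not
    // perform a DNS lookup when it is given a literal IP address.
    static int toInt(String ipv4) throws UnknownHostException {
        byte[] b = InetAddress.getByName(ipv4).getAddress();
        return ((b[0] & 0xFF) << 24) | ((b[1] & 0xFF) << 16) | ((b[2] & 0xFF) << 8) | (b[3] & 0xFF);
    }

    // True if ip lies inside the IPv4 CIDR block, e.g. "172.30.0.0/16".
    static boolean contains(String cidr, String ip) throws UnknownHostException {
        String[] parts = cidr.split("/");
        int prefix = Integer.parseInt(parts[1]);
        int mask = prefix == 0 ? 0 : -1 << (32 - prefix); // Java masks shift counts, so /0 needs a special case
        return (toInt(parts[0]) & mask) == (toInt(ip) & mask);
    }

    public static void main(String[] args) throws UnknownHostException {
        System.out.println(contains("172.30.0.0/16", "172.30.1.1"));    // true: internal registry
        System.out.println(contains("172.30.0.0/16", "192.168.42.84")); // false: the Minishift VM address
    }
}
```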

Start a Jenkins master within the cluster using the template available:

oc new-app --template=jenkins-persistent -p MEMORY_LIMIT=1024Mi

Once Jenkins is up, it should be available via a route created by the template (e.g., https://jenkins-myproject.192.168.42.84.nip.io). Login is integrated with OpenShift (admin/admin).

Create a new Pipeline project that takes the Pipeline script from SCM, pointing to a Git repository (e.g., kubernetes-integration-test.git) that contains the Jenkinsfile to execute. Then simply click Build Now.

The first run takes longer, as images are downloaded from the Docker registries. If everything goes well, we can see the test execution on the Jenkins build's Console Output. The dynamically created pods can be seen on the OpenShift Console under My Project / Pods.

If something goes wrong, try to investigate by looking at:

- Jenkins build output

- Jenkins master pod log

- Jenkins kubernetes-plugin configuration

- Events of created pods (Maven or integration-test)

- Log of created pods

If you'd like to make additional executions quicker, you can use a volume as a local Maven repository so Maven doesn't have to download dependencies every time. Create a PersistentVolumeClaim:

```shell
oc create -f - <<EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: mavenlocalrepo
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
EOF
```

Add the volume to the podTemplate (and optionally the Maven template in kubernetes-plugin). See kubernetes-integration-test/Jenkinsfile-mavenlocalrepo:

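```groovy
volumes: [
  persistentVolumeClaim(mountPath: '/home/jenkins/.m2/repository',
                        claimName: 'mavenlocalrepo')
]
```

Note that Maven local repositories are documented as not thread-safe and should not be used by multiple builds at the same time. We use a ReadWriteOnce claim here. Keep in mind that ReadWriteOnce limits read-write mounts to a single node, not a single pod, so on a one-node cluster such as Minishift it does not by itself stop two builds from sharing the repository.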

The jenkins-2-rhel7:v3.9 image has kubernetes-plugin v1.2 installed. To run the Jenkinsfile-declarative and Jenkinsfile-yaml examples, you need to update the plugin in Jenkins to v1.7+.

To completely clean up after stopping Minishift, delete the ~/.minishift directory.

Limitations

Each project is different, so it's important to understand the impact of the following limitations and factors on your case:

- Using the jenkins-kubernetes-plugin to create the test environment is independent of the integration test itself. The tests can be written in any language and executed with any test framework, which is a great power but also a great responsibility.
- The whole test pod is created before the test execution and shut down afterward. There is no solution provided here to manage the containers during test execution. It's possible to split up your tests into different stages with different pod templates, but that adds a lot of complexity.

- The containers start before the first pipeline steps are executed. Files from the integration test project are not accessible at that point, so we can't run prepare scripts or provide configuration files for those processes.

- All containers belong to the same pod, so they must run on the same node. If we need many containers and the pod requires too many resources, there may be no node available to run the pod.

- The size and scale of the integration test environment should be kept low. Though it's possible to start up multiple microservices and run end-to-end tests within one pod, the number of required containers can quickly increase. This environment is also not ideal to test high availability and scalability requirements.

- The test pod is re-created for each execution, but the state of the containers is still kept during its run. This means that the individual test cases are not independent from each other. It's the test project's responsibility to do some cleanup between them if needed.
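To put the "same node" constraint in numbers for the first podTemplate in this post, consider how Kubernetes handles containers that set only limits. In that case the limits are also used as the requests, so the scheduler needs a single node with the sum of all container limits available. Below is a quick Java sketch of that arithmetic, using the resourceLimitMemory values from the example.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class PodMemory {
    // Sums per-container memory in MiB; the whole pod must fit on one node.
    static int totalMiB(Map<String, Integer> limitsMiB) {
        int total = 0;
        for (int v : limitsMiB.values()) {
            total += v;
        }
        return total;
    }

    public static void main(String[] args) {
        // resourceLimitMemory values from the example podTemplate
        Map<String, Integer> limits = new LinkedHashMap<>();
        limits.put("jnlp", 512);
        limits.put("app-users", 512);
        limits.put("mariadb", 256);
        limits.put("amq", 256);
        limits.put("mockserver", 256);
        System.out.println("Pod needs " + totalMiB(limits) + "Mi on a single node"); // Pod needs 1792Mi on a single node
    }
}
```

Every container added to the template increases this total, which is why the test environment should stay small.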

Summary

Running integration tests in an environment created dynamically from code is relatively easy using Jenkins pipeline and the kubernetes-plugin. We just need a Kubernetes cluster and some experience with containers. Fortunately, more and more platforms provide official Docker images on one of the public registries. In the worst-case scenario, we have to build some ourselves. The hassle of preparing the pipeline and integration tests pays off quickly, especially if you want to try different configurations or dependency version upgrades during your application's lifecycle.

This was originally published on Medium and is reprinted with permission.
