Kubernetes is an open source container-orchestration system for automating the deployment, scaling, and management of containerized applications. If you've played around with it or with OpenShift (an enterprise-ready Kubernetes distribution for running in production), the following questions may have occurred to you:

- Does OpenShift support running applications at scale?
- What are the cluster limits?
- How can we tune a cluster to get maximum performance?
- What are the challenges of running and maintaining a large and/or dense cluster?

Red Hat's performance and scalability team created an automation pipeline and tooling to help answer these and other questions.

Infrastructure

Infrastructure is one of the key things needed to test any product at scale. Our scale lab includes 350+ machines with 8,000+ cores, which lets us test products at their upper limits. Even though the lab already has a lot of resources, we add more machines every year. All the resources are shared and available on demand.

Cluster availability

We usually install (at minimum) a 2,000-node cluster for every OpenShift release. This supports the cluster-limits tests in our pipeline and enables us to push scalability to find new limits.

We also keep a 250-node cluster running at all times to resolve customer issues, design new tests for new upstream features, and do R&D.

Automation pipeline and tooling

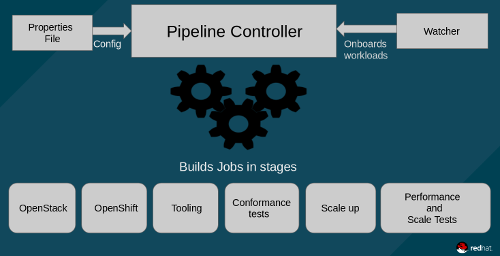

Our pipeline runs in stages; each stage is an individual Jenkins job, and all the stages are tied together by the pipeline controller. Components in the pipeline are:

- Jenkinsfile: Loads the pipeline-build scripts in stages
- Jenkins Job Builder (JJB) template: A YAML definition of the Jenkins job
- Watcher: Looks for new JJB templates or updates to existing templates and creates or updates the corresponding jobs in Jenkins
- Pipeline controller: Ties together all the jobs, including cluster installation/configuration and the various performance and scale tests
- Property files: Placeholders for job parameters
- Build scripts: Read the property files and call the responsible jobs in the pipeline with those parameters
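The build-script step can be sketched as follows. This is a minimal, hypothetical illustration rather than our actual scripts: it assumes a simple `KEY=VALUE` property-file format and targets Jenkins's remote-access `buildWithParameters` endpoint. The function names `load_properties` and `build_trigger_url`, the job name, and the parameter names are made up for the example.

```python
from urllib.parse import quote, urlencode


def load_properties(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines (a simplified, hypothetical property format)."""
    params: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        # Split on the first '=' only, so values may themselves contain '='.
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"malformed property line: {raw!r}")
        params[key.strip()] = value.strip()
    return params


def build_trigger_url(jenkins_url: str, job: str, params: dict[str, str]) -> str:
    """Build the Jenkins remote-access URL that starts `job` with `params`.

    Actually triggering the build needs an authenticated HTTP POST to this
    URL; that part is deliberately left out of the sketch.
    """
    return f"{jenkins_url.rstrip('/')}/job/{quote(job)}/buildWithParameters?{urlencode(params)}"


# Hypothetical property file for a scale run.
SAMPLE = """
# parameters for the Scaleup job
NODE_COUNT=2000
ANSIBLE_FORKS=100
"""

if __name__ == "__main__":
    props = load_properties(SAMPLE)
    print(build_trigger_url("https://jenkins.example.com", "scaleup", props))
```

Keeping parsing separate from triggering means the same property file can drive several jobs, each receiving only the parameters it needs.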

Pipeline jobs

Given a property file that contains the parameters the jobs need, the pipeline controller runs the following jobs:

Image Provisioner

The Image Provisioner job watches for new OpenShift code bits and builds Amazon machine images (AMIs) and qcow images with our tooling, along with various packages, configurations, and container images baked into them. This reduces the time the installer needs to build out an OpenShift cluster, because it no longer has to fetch those packages and images over the network.

Image Validator

Once the image is ready, the Image Validator checks that it is valid by installing an all-in-one (single-node) OpenShift cluster from it. The validated image is then used as the base image for the OpenShift cluster.

OpenStack

This job installs OpenStack on the hardware. OpenStack provides the virtualization platform for scaling out the OpenShift cluster to a higher node count. By using virtual machines, we can conserve lab resources and run large-scale tests on a smaller hardware footprint.

OpenShift

This job installs OpenShift on top of OpenStack. It builds what we call a core cluster: a bare-minimum cluster with three Master/etcd nodes, three Infra nodes, three Storage nodes, and two Application/Worker nodes. We make sure the core nodes don't land on the same hypervisor, so that the cluster is truly highly available (HA) and the performance and scale test results are valid. We use NVMe pass-through for most of the core nodes, because etcd running on the Master nodes, Elasticsearch running on the Infra nodes, and Red Hat OpenShift Container Storage running on the Storage nodes need high throughput and low latency to perform well.
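On OpenStack, one common way to keep instances on separate hosts is a Nova server group with an anti-affinity policy. Independently of how placement is enforced, it is easy to verify afterwards. The sketch below is a hypothetical check, not part of our tooling: it assumes you already have a mapping of core node name to hypervisor (for example, collected from the OpenStack API) and reports every hypervisor that hosts more than one core node. The node and hypervisor names are made up.

```python
from collections import defaultdict


def shared_hypervisors(placement: dict[str, str]) -> dict[str, list[str]]:
    """Map each hypervisor hosting more than one core node to those nodes.

    `placement` maps core node name -> hypervisor name. An empty result means
    every core node landed on its own hypervisor.
    """
    by_host: dict[str, list[str]] = defaultdict(list)
    for node, hypervisor in placement.items():
        by_host[hypervisor].append(node)
    return {host: sorted(nodes) for host, nodes in by_host.items() if len(nodes) > 1}


if __name__ == "__main__":
    # Hypothetical placement where two masters share a hypervisor.
    placement = {"master-0": "hv-01", "master-1": "hv-01", "master-2": "hv-02"}
    print(shared_hypervisors(placement))
```

Running a check like this before the Conformance stage catches a bad placement early, before any performance numbers are collected from it.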

Conformance

This job runs the Kubernetes end-to-end tests to check the cluster's sanity.
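The gating between stages can be illustrated with a small sketch. It is hypothetical and only mirrors the ordering described in this post: each stage is modeled as a callable that returns `True` on success (standing in for triggering a Jenkins job and waiting for its result), and the controller stops at the first failure, so Scaleup never runs after a failed Conformance stage. `run_pipeline` and `Stage` are illustrative names, not our controller's API.

```python
from typing import Callable

# A stage is a (name, run) pair; run() returns True when the job succeeds.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order, stopping at the first failure.

    Returns the names of the stages that passed.
    """
    passed: list[str] = []
    for name, run in stages:
        if not run():
            print(f"{name} failed; skipping the remaining stages")
            break
        passed.append(name)
    return passed


if __name__ == "__main__":
    # Hypothetical stand-ins for Jenkins jobs; Conformance is made to fail.
    stages: list[Stage] = [
        ("OpenShift", lambda: True),
        ("Conformance", lambda: False),
        ("Scaleup", lambda: True),
    ]
    print(run_pipeline(stages))
```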

Scaleup

This job scales out the cluster to the desired node count once it gets a green signal from the Conformance job. We don't let the OpenShift job create the 2,000-node cluster in one go: if the end-to-end tests then revealed functional problems, we would have to tear down and rebuild the entire cluster, which is far more time-consuming than tearing down an 11-node cluster. Because the OpenShift installer is Ansible-based, we recommend setting the number of Ansible forks appropriately; Ansible runs each task on up to that many hosts in parallel, so a suitable fork count makes the Scaleup process faster.
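A rough back-of-the-envelope model shows why the fork count matters at this scale. Ansible executes a task on at most `forks` hosts at a time (set with `forks` in the `[defaults]` section of `ansible.cfg` or with `--forks` on the command line; the default is 5). Assuming the default linear strategy and roughly equal per-host task time, one task across N hosts needs about ceil(N / forks) rounds. This is a simplification for illustration, not a measured result; `task_rounds` is a hypothetical helper.

```python
import math


def task_rounds(hosts: int, forks: int) -> int:
    """Approximate sequential rounds for one task across `hosts` hosts.

    Assumes Ansible's linear strategy and similar per-host task duration, so
    at most `forks` hosts run the task concurrently.
    """
    if hosts < 0 or forks < 1:
        raise ValueError("hosts must be >= 0 and forks must be >= 1")
    return math.ceil(hosts / forks)


if __name__ == "__main__":
    for forks in (5, 50, 100):
        print(f"forks={forks}: about {task_rounds(2000, forks)} rounds per task")
```

Under this model, a 2,000-host task drops from 400 rounds at the default of 5 forks to 20 rounds at 100 forks. In practice the useful upper bound depends on the memory and CPU of the machine running Ansible.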

Performance and scale tests

We designed tests to look at the performance of the control plane, kubelet, networking, HAProxy, and storage. The pipeline includes many tests to validate and push toward higher cluster limits. We run the pipeline once every sprint with the latest OpenShift bits and look for performance regressions.
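Comparing one sprint's results against the previous run is one simple way to flag regressions. The sketch below is hypothetical: it assumes each test produces named "lower is better" metrics (such as latencies in milliseconds) and flags any metric that got worse than its baseline by more than a relative tolerance. The metric names and the 10% default tolerance are illustrative assumptions, not values from our pipeline.

```python
def find_regressions(baseline: dict[str, float], current: dict[str, float],
                     tolerance: float = 0.10) -> dict[str, float]:
    """Return metrics that worsened by more than `tolerance` (relative change).

    Assumes every metric is "lower is better". Metrics missing from the
    current run, or with a non-positive baseline, are skipped.
    """
    regressions: dict[str, float] = {}
    for name, before in baseline.items():
        after = current.get(name)
        if after is None or before <= 0:
            continue
        change = (after - before) / before
        if change > tolerance:
            regressions[name] = change
    return regressions


if __name__ == "__main__":
    # Hypothetical metrics from two sprints.
    previous = {"pod_startup_p99_ms": 2000.0, "api_latency_p99_ms": 100.0}
    latest = {"pod_startup_p99_ms": 2100.0, "api_latency_p99_ms": 150.0}
    print(find_regressions(previous, latest))
```

A fixed relative threshold is the simplest choice; noisy metrics usually call for comparing against several previous runs instead of a single baseline.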

The pipeline's purpose

We built this pipeline to enable teams across Red Hat to test application workloads at scale. Advantages of using our automation pipeline and tooling include:

- Eliminates the need for a large infrastructure of your own
- Enables teams to reuse our tooling to analyze the performance and scalability of OpenShift rather than spending resources on developing new tooling
- Removes the need to build and maintain a large-scale OpenShift cluster with the latest and greatest bits

How to onboard workloads into the pipeline

We store all our job templates and scripts in a public GitHub repo, and adding a new workload is as simple as submitting a pull request with the right templates. The Watcher component takes care of creating the job and adding it to the pipeline; then we test the workload along with our test stages, which are part of the pipeline. Users can expect to receive test results once a sprint completes, after which we refresh the cluster with the new OpenShift bits.
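The Watcher's internals aren't described here, but the core idea of spotting new or updated templates can be sketched. In this hypothetical version, each YAML template is fingerprinted with a SHA-256 digest of its contents, and two fingerprints are compared to find added and changed files. Applying the changes to Jenkins could then be delegated to Jenkins Job Builder's `jenkins-jobs update` command. The function names, the `.yml`/`.yaml` file convention, and the snapshot approach are assumptions for illustration. Deleted templates are not handled.

```python
import hashlib
from pathlib import Path


def fingerprint(template_dir: Path) -> dict[str, str]:
    """Map each YAML template in `template_dir` to a SHA-256 digest of its contents."""
    return {
        path.name: hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(template_dir.iterdir())
        if path.is_file() and path.suffix in {".yml", ".yaml"}
    }


def changed_templates(previous: dict[str, str],
                      current: dict[str, str]) -> tuple[list[str], list[str]]:
    """Return (added, updated) template names between two fingerprints."""
    added = sorted(name for name in current if name not in previous)
    updated = sorted(
        name for name in current
        if name in previous and current[name] != previous[name]
    )
    return added, updated


if __name__ == "__main__":
    # Hypothetical snapshots: one template changed, one was added by a pull request.
    before = {"scaleup.yml": "old-digest"}
    now = {"scaleup.yml": "new-digest", "my-workload.yml": "digest"}
    print(changed_templates(before, now))  # (['my-workload.yml'], ['scaleup.yml'])
```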

Sebastian Jug & Naga Ravi Chaitanya Elluri presented Automated Kubernetes Scalability Testing at KubeCon + CloudNativeCon North America, December 10-13 in Seattle.
