When I joined the cloud ops team at WorkSafeBC, which is responsible for cloud operations and for streamlining the engineering process, I shared my dream of one instrumented pipeline, with one continuous integration build and continuous delivery for every product.

According to Lukas Klose, flow (within the context of software engineering) is "the state of when a system produces value at a steady and predictable rate." I think achieving flow is one of our greatest challenges and opportunities, especially in the complex domain of emergent solutions. We need to strive towards a continuous and incremental delivery model with consistent, efficient, and quality solutions, building the right things and delighting our users. We also need to find ways to break down our systems into smaller pieces that are valuable on their own, enabling teams to deliver value incrementally. This requires a change of mindset for both business and engineering.

Continuous integration and delivery (CI/CD) pipeline

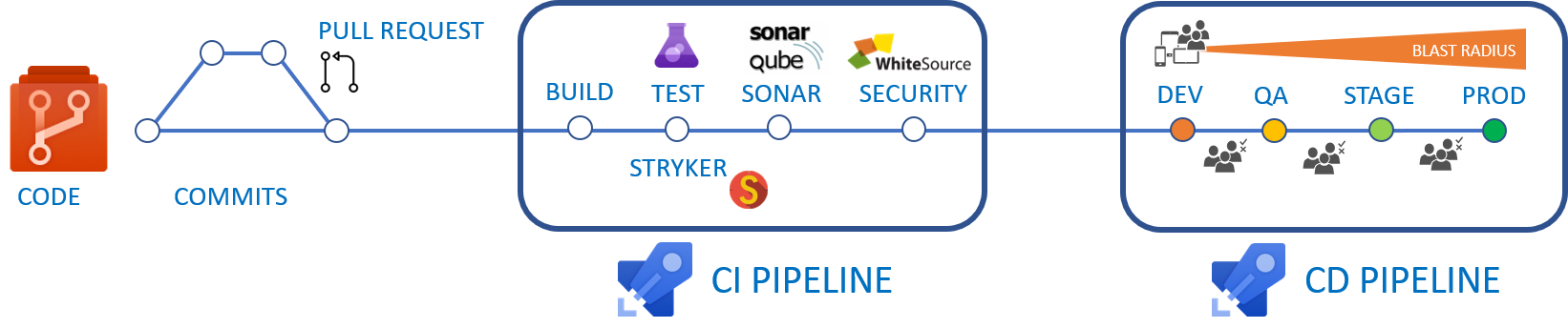

The CI/CD pipeline is a DevOps practice for delivering code changes more often, consistently, and reliably. It enables agile teams to improve four key performance indicators (KPIs): increasing deployment frequency while decreasing lead time for change, change-failure rate, and mean time to recovery. The result is better quality and faster delivery of value. The only prerequisites are a solid development process, a mindset for quality and accountability for features from ideation to deprecation, and a comprehensive pipeline (as illustrated below).
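To make these four KPIs concrete, here is a minimal sketch of how they could be computed from deployment records. The `Deployment` record, its field names, and the `delivery_kpis` helper are hypothetical and only for illustration; a real pipeline would pull this data from its own release and incident history.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Deployment:
    # Hypothetical record shape; the field names are assumptions.
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when the change reached production
    failed: bool = False                     # did the change cause a failure?
    restored_at: Optional[datetime] = None   # when service was restored, if it failed


def delivery_kpis(deployments: List[Deployment], period_days: int) -> dict:
    """Compute the four delivery KPIs over a reporting period."""
    if not deployments:
        raise ValueError("need at least one deployment")
    count = len(deployments)
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    # Only failures that have been resolved contribute to recovery time.
    recoveries = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployments_per_day": count / period_days,
        "mean_lead_time": sum(lead_times, timedelta()) / count,
        "change_failure_rate": len(failures) / count,
        "mean_time_to_recovery": (
            sum(recoveries, timedelta()) / len(recoveries) if recoveries else None
        ),
    }
```

Tracking the trend of these numbers over time is usually more informative than any single value.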

The pipeline streamlines the engineering process and products to stabilize infrastructure environments; optimize flow; and create consistent, repeatable, and automated tasks. This enables us to turn complex tasks into complicated tasks, as outlined by Dave Snowden's Cynefin Sensemaking model, reducing maintenance costs and increasing quality and reliability.

Part of streamlining our flow is to minimize the three types of wasteful practice: Muri (overload), Mura (variation), and Muda (waste).

- Muri: avoid over-engineering, features that do not link to business value, and excessive documentation

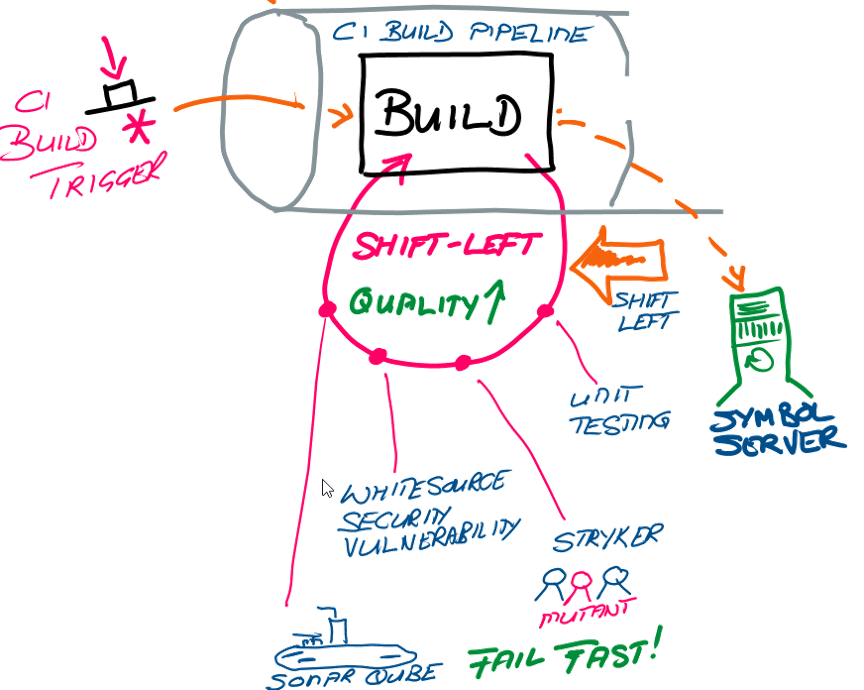

- Mura: improve approval and validation processes (e.g., security signoffs); drive the shift-left initiative to push unit testing, security vulnerability scanning, and code quality inspection; and improve risk assessment

- Muda: avoid waste such as technical debt, bugs, and upfront, detailed documentation

It appears that 80% of the focus and intention is on products that provide an integrated and collaborative engineering system that can take an idea and plan, develop, test, and monitor your solutions. However, a successful transformation and engineering system is only 5% about products, 15% about process, and 80% about people.

There are many products at our disposal. For example, Azure DevOps offers rich support for continuous integration (CI), continuous delivery (CD), extensibility, and integration with open source and commercial off-the-shelf (COTS) software as a service (SaaS) solutions such as Stryker, SonarQube, WhiteSource, Jenkins, and Octopus. For engineers, it is always a temptation to focus on products, but remember that they are only 5% of our journey.

The biggest challenge is breaking down a process based on decades of rules, regulations, and frustrating areas of comfort: "It is how we have always done it; why change?"

The friction between people in development and operations results in a variety of fragmented, duplicated, and incessant integration and delivery pipelines. Development wants access to everything, to iterate continuously, to enable users, and to release continuously and fast. Operations wants to lock down everything to protect the business and users and drive quality. This often inadvertently leads to processes and governance that are hard to automate, which results in slower-than-expected release cycles.

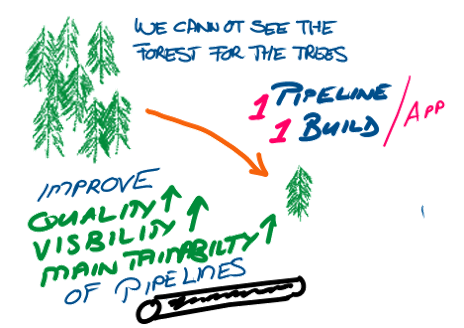

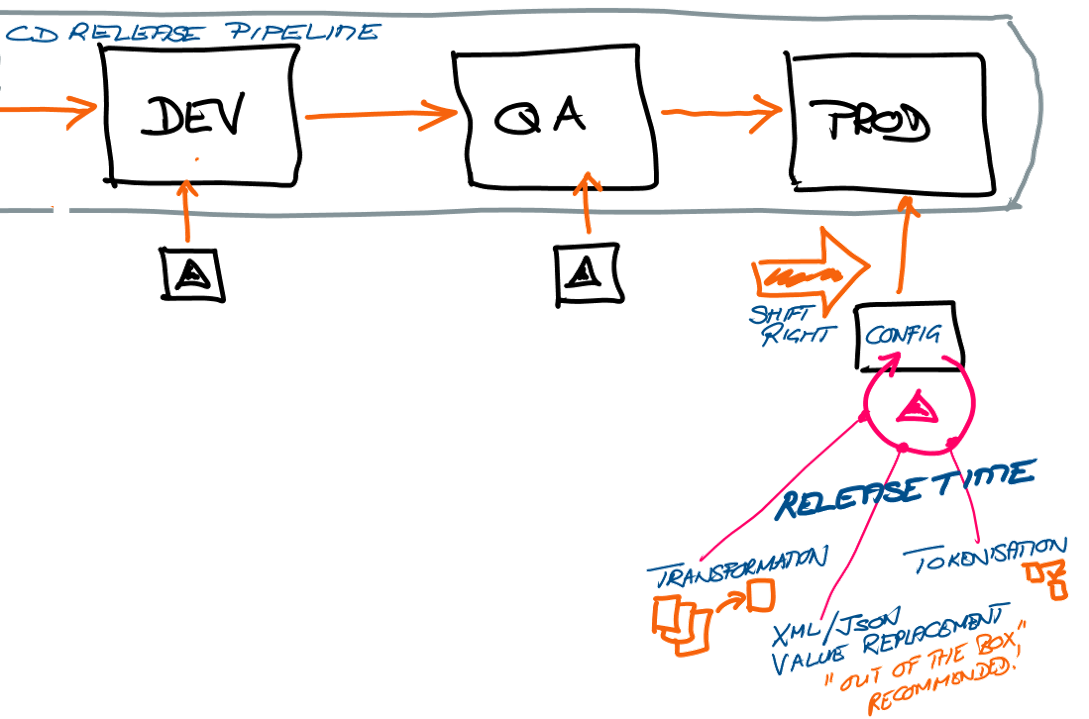

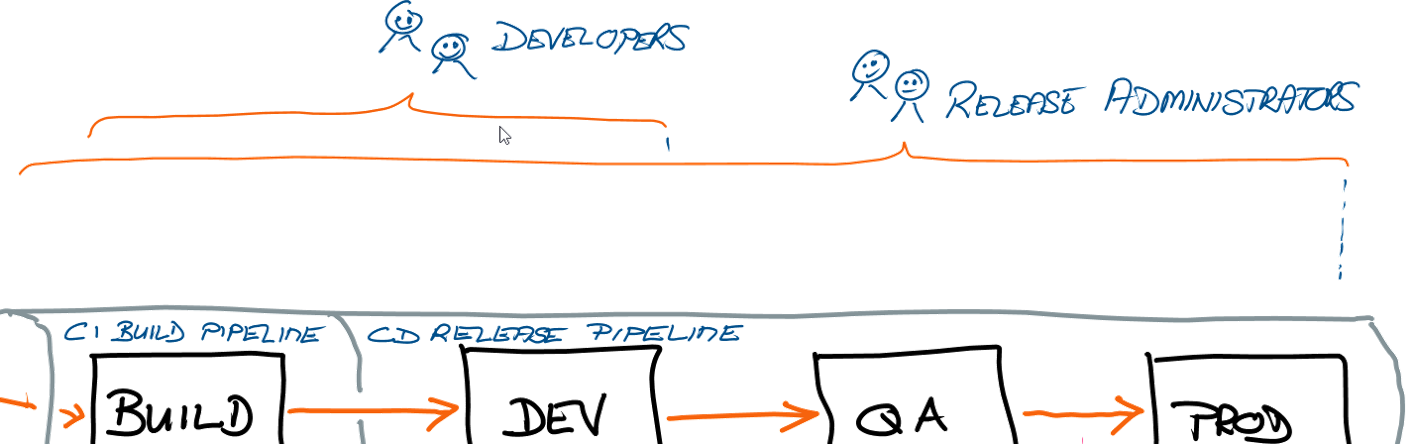

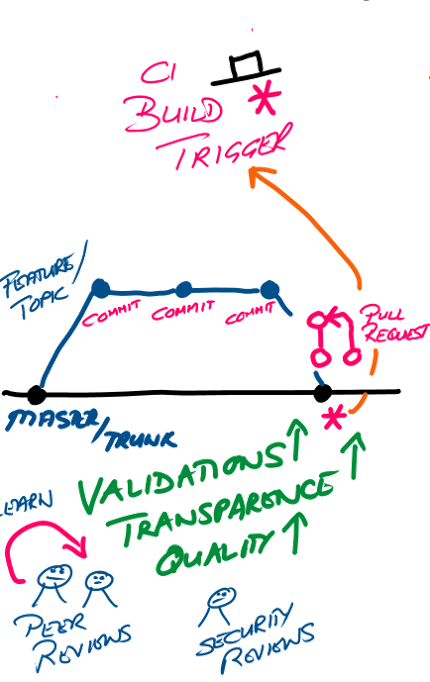

Let us explore the pipeline with snippets from a recent whiteboard discussion.

Supporting many pipeline variations is difficult and costly; inconsistent versioning and traceability complicate live site incidents; and continuously streamlining the development process and pipelines becomes a challenge.

I advocate a few principles that enable one universal pipeline per product:

- Automate everything automatable

- Build once

- Maintain continuous integration and delivery

- Maintain continuous streamlining and improvement

- Maintain one build definition

- Maintain one release pipeline definition

- Scan for vulnerabilities early and often, and fail fast

- Test early and often, and fail fast

- Maintain traceability and observability of releases

If I poke the hornet's nest, however, the most important principle is to keep it simple. If you cannot explain the reason (what, why) and the process (how) of your pipelines, you do not understand your engineering process. Most of us are not looking for the best, ultramodern, and revolutionary pipeline; we need one that is functional, valuable, and an enabler for engineering. Tackle the 80% (the culture, people, and their mindset) first. Ask your CI/CD knights in shining armor, with their TLA (two/three-letter acronym) symbols on their shields, to join the might of practical and empirical engineering.

Unified pipeline

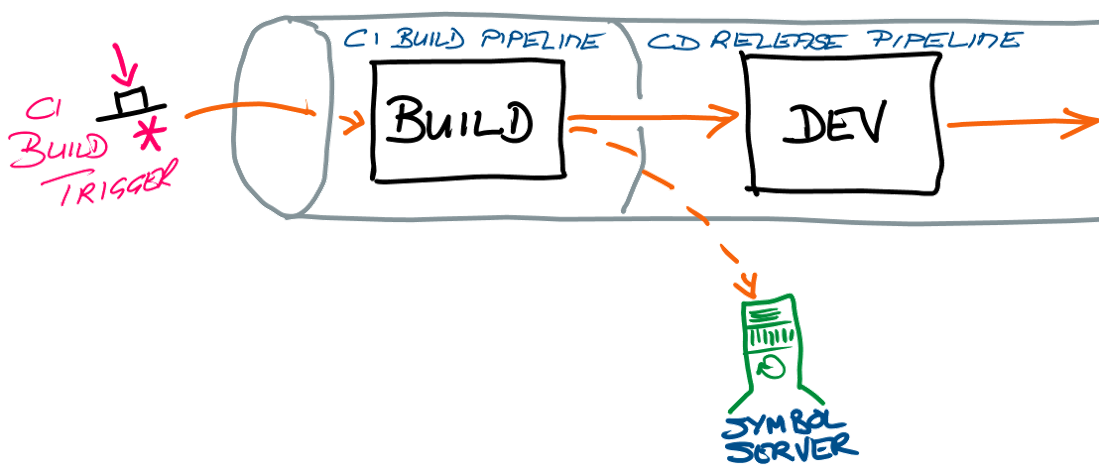

Let us walk through one of our design practice whiteboard sessions.

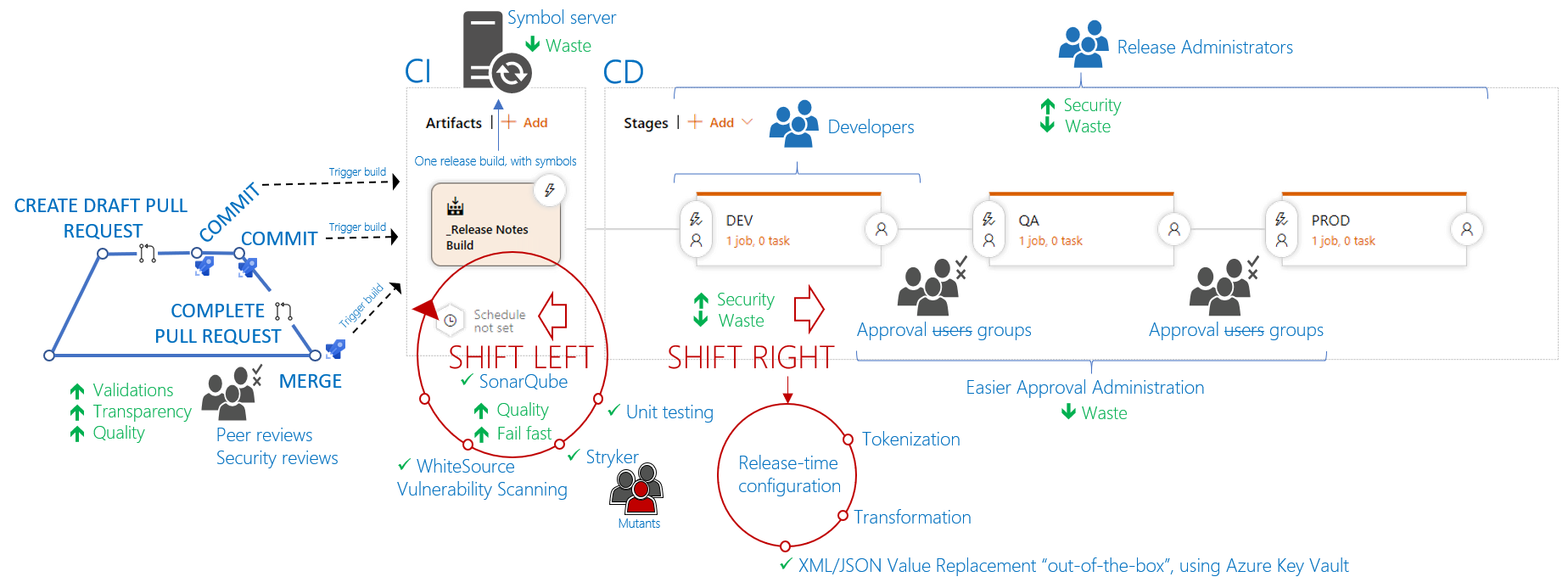

Define one CI/CD pipeline with one build definition per application that is used to trigger both pull-request pre-merge validation and continuous integration builds. Generate a release build with debug information and upload the symbols to the symbol server. This enables developers to debug locally and remotely in production without having to worry about which build and symbols they need to load; the symbol server performs that magic for us.

Perform as many validations as possible in the build—shift left—allowing feature teams to fail fast, continuously raise the overall product quality, and include invaluable evidence for the reviewers with every pull request. Do you prefer a pull request with a gazillion commits? Or a pull request with a couple of commits and supporting evidence such as security vulnerabilities, test coverage, code quality, and Stryker mutant remnants? Personally, I vote for the latter.
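As a rough illustration of failing fast while collecting evidence for reviewers, the sketch below runs a list of validations in order and stops at the first failure. The `run_gate` helper, the check names, and the thresholds are all hypothetical; in practice each check would wrap whatever your test runner, vulnerability scanner, or code-quality tool reports.

```python
from typing import Callable, List, Tuple

# A check is a display name plus a function that returns True when it passes.
Check = Tuple[str, Callable[[], bool]]


def run_gate(checks: List[Check]) -> Tuple[bool, List[str]]:
    """Run checks in order and stop at the first failure (fail fast).

    Returns (passed, evidence), where evidence holds one line per check
    that was executed and can be attached to the pull request.
    """
    evidence: List[str] = []
    for name, check in checks:
        ok = check()
        evidence.append(f"{name}: {'pass' if ok else 'FAIL'}")
        if not ok:
            return False, evidence
    return True, evidence


# Hypothetical build results and thresholds, for illustration only.
results = {"tests_failed": 0, "critical_vulnerabilities": 1, "coverage": 0.82}
passed, evidence = run_gate([
    ("unit tests", lambda: results["tests_failed"] == 0),
    ("vulnerability scan", lambda: results["critical_vulnerabilities"] == 0),
    ("test coverage", lambda: results["coverage"] >= 0.80),
])
print(passed, evidence)
```

Ordering the cheapest and most decisive checks first keeps feedback fast; here the coverage check never runs because the vulnerability scan already failed the build.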

Do not use build transformation to generate multiple, environment-specific builds. Create one build and perform release-time transformation, tokenization, and/or XML/JSON value replacement. In other words, shift-right the environment-specific configuration.
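A minimal sketch of release-time tokenization follows. It assumes the single build ships a configuration template containing `#{Name}#` placeholders and that each environment supplies its own values at release time; the token syntax, the `replace_tokens` helper, and the sample values are assumptions for illustration, not a specific product's API.

```python
import re
from typing import Mapping

# Assumed placeholder syntax: #{Name}#
TOKEN = re.compile(r"#\{([A-Za-z0-9_.]+)\}#")


def replace_tokens(template: str, values: Mapping[str, str]) -> str:
    """Replace every #{Name}# token with its environment-specific value.

    Raises KeyError if the template contains a token with no value, so a
    misconfigured release fails before anything is deployed.
    """
    missing = sorted({m.group(1) for m in TOKEN.finditer(template)} - set(values))
    if missing:
        raise KeyError(f"no value for token(s): {', '.join(missing)}")
    return TOKEN.sub(lambda m: values[m.group(1)], template)


# The same build artifact is configured differently per environment.
template = '{"apiUrl": "#{ApiUrl}#", "logLevel": "#{LogLevel}#"}'
dev_config = replace_tokens(
    template, {"ApiUrl": "https://dev.example.test", "LogLevel": "Debug"}
)
prod_config = replace_tokens(
    template, {"ApiUrl": "https://prod.example.test", "LogLevel": "Warning"}
)
```

Because the binaries never change between environments, what was validated in Dev is exactly what reaches production; only the configuration differs.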

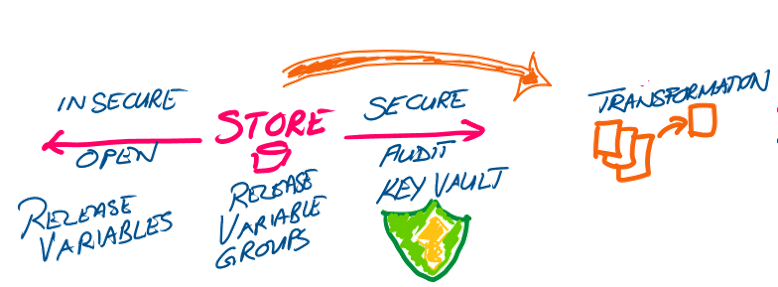

Securely store release configuration data and make it available to both Dev and Ops teams based on the level of trust and sensitivity of the data. Use the open source Key Manager, Azure Key Vault, AWS Key Management Service, or one of many other products—remember, there are many hammers in your toolkit!

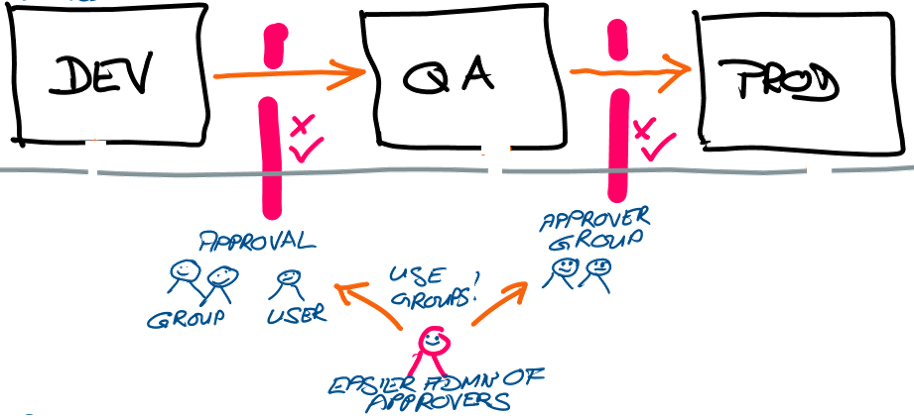

Use groups instead of users to move approver management from multiple stages across multiple pipelines to simple group membership.

Instead of duplicating pipelines to give teams access to their areas of interest, create one pipeline and grant access to specific stages of the delivery environments.
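The idea of group-based approvals and per-stage access in one pipeline can be sketched as a simple lookup. The group names, stage names, users, and the `can_approve` helper below are hypothetical; real products manage this through their own security and approval settings.

```python
from typing import Dict, Set

# Hypothetical group membership, maintained in one place.
GROUP_MEMBERS: Dict[str, Set[str]] = {
    "feature-team": {"alice", "bob"},
    "ops-approvers": {"carol"},
}

# One pipeline; each stage lists approver groups, never individual users.
STAGE_APPROVERS: Dict[str, Set[str]] = {
    "dev": {"feature-team"},
    "prod": {"ops-approvers"},
}


def can_approve(
    user: str,
    stage: str,
    stage_approvers: Dict[str, Set[str]] = STAGE_APPROVERS,
    group_members: Dict[str, Set[str]] = GROUP_MEMBERS,
) -> bool:
    """Return True if the user belongs to any approver group for the stage."""
    groups = stage_approvers.get(stage, set())
    return any(user in group_members.get(group, set()) for group in groups)
```

When someone joins or leaves a team, only the group membership changes; no stage in any pipeline has to be edited.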

Last, but not least, embrace pull requests to help raise insight and transparency into your codebase, improve the overall quality, collaborate, and release pre-validation builds into selected environments, such as the Dev environment.

Here is a more formal view of the whole whiteboard sketch.

So, what are your thoughts and learnings with CI/CD pipelines? Is my dream of one pipeline to rule them all a pipe dream?
