Recent advancements in deep learning algorithms and hardware performance have enabled researchers and companies to make giant strides in areas such as image recognition, speech recognition, recommendation engines, and machine translation. In 2011, the first superhuman performance in visual pattern recognition was achieved. In 2015, the Google Brain team unleashed TensorFlow, deftly slinging applied deep learning to the masses. TensorFlow is outpacing many complex tools used for deep learning.

With TensorFlow, you get access to powerful, sophisticated features, and the keystone of that power is TensorFlow's ease of use.

In a two-part series, I'll explain how to quickly create a convolutional neural network for practical image recognition. The computation steps are embarrassingly parallel and can be deployed to perform frame-by-frame video analysis and extended for temporal-aware video analysis.
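Each frame can be classified without looking at any other frame, so spreading the work across several workers is straightforward. The sketch below illustrates this and is not part of this series' code. `classify_frames` and the `fake_classifier` stand-in are hypothetical names. A thread pool keeps the example short, but for CPU-heavy inference a process pool or several containers may be a better fit.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def classify_frames(frames: Sequence[bytes],
                    classify_frame: Callable[[bytes], str],
                    workers: int = 4) -> List[str]:
    """Classify each frame independently and return labels in frame order.

    No frame depends on any other, so the only coordination needed is
    collecting the results.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map preserves input order, so labels line up with frames.
        return list(pool.map(classify_frame, frames))


if __name__ == "__main__":
    # Hypothetical stand-in for a real model: labels a frame by its size.
    def fake_classifier(frame: bytes) -> str:
        return "daisy" if len(frame) % 2 == 0 else "roses"

    frames = [b"ab", b"abc", b"abcd"]
    print(classify_frames(frames, fake_classifier))  # ['daisy', 'roses', 'daisy']
```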

This series cuts directly to the most compelling material. A basic understanding of the command line and Python is all you need to play along from home. It aims to get you started quickly and inspire you to create your own amazing projects. I won't dive into the depths of how TensorFlow works, but I'll provide plenty of additional references if you're hungry for more. All the libraries and tools in this series are free/libre/open source software.

How it works

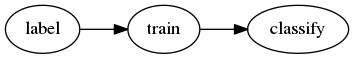

Our goal in this tutorial is to take a novel image that falls into a category we've trained and run it through a command that will tell us in which category the image fits. We'll follow these steps:


- Labeling is the process of curating training data. For flowers, images of daisies are dragged into the "daisies" folder, roses into the "roses" folder, and so on, for as many different flowers as desired. If we never label ferns, the classifier will never return "ferns." This requires many examples of each type, so it is an important and time-consuming process. (We will use pre-labeled data to start, which will make this much quicker.)

- Training is when we feed the labeled data (images) to the model. A tool will grab a random batch of images, use the model to guess what type of flower is in each, test the accuracy of the guesses, adjust the model to reduce its errors, and repeat until most of the training data is used. The images that were held back and never used for training are then used to measure the accuracy of the trained model.

- Classification is using the model on novel images. For example, input: IMG207.JPG, output: daisies. This is the fastest and easiest step and is cheap to scale.
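In this folder-per-category layout, an image's label is simply the name of the folder it sits in. The following sketch shows that mapping. `labeled_images` is a hypothetical helper (retrain.py does its own directory scanning), and counting only .jpg/.jpeg files as images is an assumption.

```python
from pathlib import Path
from typing import List, Tuple

# Assumption: only JPEG files count as training images.
IMAGE_SUFFIXES = {".jpg", ".jpeg"}


def labeled_images(root: str) -> List[Tuple[str, str]]:
    """Return (image_path, label) pairs; the label is the image's folder name."""
    pairs = []
    for class_dir in sorted(Path(root).iterdir()):
        if not class_dir.is_dir():
            continue  # skip loose files, such as a license file in the root
        for image in sorted(class_dir.iterdir()):
            if image.suffix.lower() in IMAGE_SUFFIXES:
                pairs.append((str(image), class_dir.name))
    return pairs
```

Every label this produces is a folder name. A category with no folder, such as ferns, can therefore never come out of the classifier.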

Training and classification

In this tutorial, we'll train an image classifier to recognize different types of flowers. Deep learning requires a lot of training data, so we'll need lots of sorted flower images. Thankfully, another kind soul has done an awesome job of collecting and sorting images. We'll use this sorted data set with a clever script that takes an existing, fully trained image classification model and retrains its final layer to do just what we want. This technique is called transfer learning.
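Conceptually, transfer learning keeps the pretrained network's layers fixed and treats their output as a feature vector for each image. Only a new final layer is trained on top of those vectors. The pure-Python sketch below illustrates that idea with a softmax layer trained on random mini-batches, mirroring the training step described earlier. It is not retrain.py's code. The feature vectors are hypothetical stand-ins for what a frozen Inception v3 would produce, and the learning rate, batch size, and step count are arbitrary assumptions.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def softmax(logits: Sequence[float]) -> Vector:
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_final_layer(features: Sequence[Vector], labels: Sequence[int],
                      num_classes: int, steps: int = 200,
                      batch_size: int = 8, lr: float = 0.5,
                      seed: int = 0) -> Tuple[List[Vector], Vector]:
    """Train only a new softmax layer (W, b) on fixed feature vectors."""
    rng = random.Random(seed)
    dim = len(features[0])
    W = [[0.0] * dim for _ in range(num_classes)]
    b = [0.0] * num_classes
    indices = list(range(len(features)))
    for _ in range(steps):
        # Grab a random batch, as in the training step described above.
        batch = rng.sample(indices, min(batch_size, len(indices)))
        grad_W = [[0.0] * dim for _ in range(num_classes)]
        grad_b = [0.0] * num_classes
        for i in batch:
            x = features[i]
            probs = softmax([sum(w * xi for w, xi in zip(W[c], x)) + b[c]
                             for c in range(num_classes)])
            for c in range(num_classes):
                # Gradient of cross entropy w.r.t. logit c is p_c - y_c.
                err = probs[c] - (1.0 if c == labels[i] else 0.0)
                grad_b[c] += err
                for j in range(dim):
                    grad_W[c][j] += err * x[j]
        # Nudge the final layer's weights against the averaged gradient.
        for c in range(num_classes):
            b[c] -= lr * grad_b[c] / len(batch)
            for j in range(dim):
                W[c][j] -= lr * grad_W[c][j] / len(batch)
    return W, b


def predict(W: List[Vector], b: Vector, x: Sequence[float]) -> int:
    """Return the index of the highest-scoring class for feature vector x."""
    scores = [sum(w * xi for w, xi in zip(W[c], x)) + b[c] for c in range(len(W))]
    return max(range(len(scores)), key=scores.__getitem__)
```

Only W and b change during training. That is why retraining a final layer is so much cheaper than training a whole network from scratch.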

The model we're retraining is called Inception v3, originally specified in the December 2015 paper "Rethinking the Inception Architecture for Computer Vision."

Inception doesn't know how to tell a tulip from a daisy until we do this training, which takes roughly 20 to 30 minutes depending on your hardware. This is the "learning" part of deep learning.

Installation

Step one to machine sentience: Install Docker on your platform of choice.

The first and only dependency is Docker. This is the case in many TensorFlow tutorials (which should indicate this is a reasonable way to start). I also prefer this method of installing TensorFlow because it keeps your host (laptop or desktop) clean by not installing a bunch of dependencies.

Bootstrap TensorFlow

With Docker installed, we're ready to fire up a TensorFlow container for training and classification. Create a working directory somewhere on your hard drive with 2 gigabytes of free space. Create a subdirectory called local and note the full path to that directory.

docker run -v /path/to/local:/notebooks/local --rm -it --name tensorflow tensorflow/tensorflow:nightly /bin/bash

Here's a breakdown of that command:

- -v /path/to/local:/notebooks/local mounts the local directory you just created to a convenient place in the container. If using RHEL, Fedora, or another SELinux-enabled system, append :Z to this to allow the container to access the directory.
- --rm tells Docker to delete the container when we're done.
- -it attaches our input and output to make the container interactive.
- --name tensorflow gives our container the name tensorflow instead of sneaky_chowderhead or whatever random name Docker might pick for us.
- tensorflow/tensorflow:nightly says run the nightly image of tensorflow/tensorflow from Docker Hub (a public image repository) instead of latest (by default, the most recently built/available image). We are using nightly instead of latest because (at the time of writing) latest contains a bug that breaks TensorBoard, a data visualization tool we'll find handy later.
- /bin/bash says don't run the default command; run a Bash shell instead.
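If you launch the container from a script, it can help to build the same command as an argument list. The helper below is a hypothetical convenience that the tutorial does not require. It reproduces the flags explained above and appends :Z to the mount when `selinux=True`.

```python
from typing import List


def docker_run_args(local_dir: str, selinux: bool = False,
                    image: str = "tensorflow/tensorflow:nightly") -> List[str]:
    """Build the argument list for the tutorial's docker run command.

    local_dir should be the full path to the directory named local.
    """
    mount = f"{local_dir}:/notebooks/local"
    if selinux:
        mount += ":Z"  # relabel the mount so an SELinux host lets the container use it
    return ["docker", "run", "-v", mount, "--rm", "-it",
            "--name", "tensorflow", image, "/bin/bash"]
```

You could then start the container with `subprocess.run(docker_run_args("/path/to/local"), check=True)`, substituting your own full path. Passing a list rather than a single string avoids shell-quoting problems if the path contains spaces.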

Train the model

Inside the container, run these commands to download and sanity check the training data.

curl -O http://download.tensorflow.org/example_images/flower_photos.tgz
echo 'db6b71d5d3afff90302ee17fd1fefc11d57f243f flower_photos.tgz' | sha1sum -c

If you don't see the message flower_photos.tgz: OK, you don't have the correct file. If the above curl or sha1sum steps fail, manually download and extract the training data tarball (SHA-1 checksum: db6b71d5d3afff90302ee17fd1fefc11d57f243f) in the local directory on your host.
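The sha1sum -c check recomputes the file's SHA-1 digest and compares it with the expected value. If sha1sum isn't available, for example when you download the tarball manually on your host, a minimal Python equivalent might look like this. `sha1_matches` is a hypothetical helper name.

```python
import hashlib


def sha1_matches(path: str, expected_hex: str, chunk_size: int = 1 << 20) -> bool:
    """Return True if the file's SHA-1 digest equals expected_hex."""
    digest = hashlib.sha1()
    with open(path, "rb") as f:
        # Read in chunks so a large tarball never has to fit in memory at once.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_hex.lower()
```

For a good download, `sha1_matches("flower_photos.tgz", "db6b71d5d3afff90302ee17fd1fefc11d57f243f")` should return True.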

Now put the training data in place, then download and sanity check the retraining script.

mv flower_photos.tgz local/
cd local
tar xzf flower_photos.tgz

curl -O https://raw.githubusercontent.com/tensorflow/tensorflow/10cf65b48e1b2f16eaa826d2793cb67207a085d0/tensorflow/examples/image_retraining/retrain.py
echo 'a74361beb4f763dc2d0101cfe87b672ceae6e2f5 retrain.py' | sha1sum -c

Look for confirmation that retrain.py has the correct contents. You should see retrain.py: OK.

Finally, it's time to learn! Run the retraining script.

python retrain.py --image_dir flower_photos --output_graph output_graph.pb --output_labels output_labels.txt

If you encounter this error, ignore it:

TypeError: not all arguments converted during string formatting
Logged from file tf_logging.py, line 82

As retrain.py proceeds, the images are automatically separated into training, validation, and test sets.
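A split like this keeps some images out of training, so the model can be judged on pictures it has never seen. retrain.py uses its own method to decide which image lands in which set. The sketch below is only a plain random split, assuming 10% validation and 10% test shares, with a fixed seed so the result is reproducible.

```python
import random
from typing import Dict, List, Sequence


def split_dataset(items: Sequence[str], validation_pct: float = 10.0,
                  test_pct: float = 10.0, seed: int = 42) -> Dict[str, List[str]]:
    """Randomly partition items into training, validation, and test sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed: same split every run
    n_val = int(len(shuffled) * validation_pct / 100)
    n_test = int(len(shuffled) * test_pct / 100)
    return {
        "validation": shuffled[:n_val],
        "testing": shuffled[n_val:n_val + n_test],
        "training": shuffled[n_val + n_test:],
    }
```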

In the output, we're hoping for high "Train accuracy" and "Validation accuracy" and low "Cross entropy." See "How to retrain Inception's final layer for new categories" for a detailed explanation of these terms. Expect training to take around 30 minutes on modern hardware.
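Cross entropy measures how much probability the model gave to the correct answer. For a single image with one correct label, it is -log(p), where p is the score assigned to the true class. A confident correct guess therefore costs little, and a confident wrong guess costs a lot. The following minimal sketch uses the natural logarithm. `cross_entropy` and `mean_cross_entropy` are illustrative names, not retrain.py functions.

```python
import math
from typing import Sequence


def cross_entropy(probs: Sequence[float], true_index: int) -> float:
    """Cross entropy for one example with a single correct class: -log(p_true)."""
    return -math.log(probs[true_index])


def mean_cross_entropy(batch_probs: Sequence[Sequence[float]],
                       true_indices: Sequence[int]) -> float:
    """Average the per-example loss over a batch."""
    losses = [cross_entropy(p, t) for p, t in zip(batch_probs, true_indices)]
    return sum(losses) / len(losses)


print(round(cross_entropy([0.9, 0.05, 0.05], 0), 3))  # 0.105 (confident and right)
print(round(cross_entropy([0.1, 0.8, 0.1], 0), 3))    # 2.303 (confident and wrong)
```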

Pay attention to the last line of output in your console:

INFO:tensorflow:Final test accuracy = 89.1% (N=340)

This says we've got a model that will, nine times out of 10, correctly guess which one of five possible flower types is shown in a given image. Your accuracy will likely differ because of randomness injected into the training process.
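Test accuracy is the fraction of held-out test images that the model labels correctly. Working backward from the log line above, 89.1% of N=340 means 303 correct guesses: 302/340 rounds to 88.8% and 304/340 rounds to 89.4%. The sketch below checks that arithmetic. The label lists are made up purely to reproduce that count.

```python
from typing import Sequence


def accuracy(predicted: Sequence[str], actual: Sequence[str]) -> float:
    """Fraction of predictions that match the true labels."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Illustrative data: 303 right and 37 wrong out of N=340.
predicted = ["roses"] * 303 + ["tulips"] * 37
actual = ["roses"] * 340
print(f"{accuracy(predicted, actual):.1%}")  # 89.1%
```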

Classify

With one more small script, we can feed new flower images to the model and it'll output its guesses. This is image classification.

Save the following as classify.py in the local directory on your host:

import sys

import tensorflow as tf

image_path = sys.argv[1]
graph_path = 'output_graph.pb'
labels_path = 'output_labels.txt'

# Read in the image_data
with tf.gfile.FastGFile(image_path, 'rb') as f:
    image_data = f.read()

# Loads label file, strips off the trailing newline on each line
label_lines = [line.rstrip() for line
               in tf.gfile.GFile(labels_path)]

# Unpersists graph from file
with tf.gfile.FastGFile(graph_path, 'rb') as f:
    graph_def = tf.GraphDef()
    graph_def.ParseFromString(f.read())
    _ = tf.import_graph_def(graph_def, name='')

# Feed the image_data as input to the graph and get first prediction
with tf.Session() as sess:
    softmax_tensor = sess.graph.get_tensor_by_name('final_result:0')
    predictions = sess.run(softmax_tensor,
                           {'DecodeJpeg/contents:0': image_data})

    # Sort to show labels of first prediction in order of confidence
    top_k = predictions[0].argsort()[-len(predictions[0]):][::-1]
    for node_id in top_k:
        human_string = label_lines[node_id]
        score = predictions[0][node_id]
        print('%s (score = %.5f)' % (human_string, score))

To test your own image, save it as test.jpg in your local directory and run (in the container) python classify.py test.jpg. The output will look something like this:

sunflowers (score = 0.78311)
daisy (score = 0.20722)
dandelion (score = 0.00605)
tulips (score = 0.00289)
roses (score = 0.00073)

The numbers indicate confidence. The model is 78.311% sure the flower in the image is a sunflower. A higher score indicates a more likely match. Note that the model always picks exactly one label per image: the five scores sum to 1, and the highest one wins. Multi-label classification, such as tagging a photo that contains both roses and tulips, requires a different approach.
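The final_result tensor that classify.py reads holds softmax scores, which is why the five numbers above add up to 1. The sketch below shows both steps in plain Python. `softmax` turns raw scores (logits) into positive values that sum to 1, and `rank_labels` sorts them the way the top_k loop in classify.py does. The logits and the label order are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw scores into positive values that sum to 1."""
    peak = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rank_labels(labels: Sequence[str],
                scores: Sequence[float]) -> List[Tuple[str, float]]:
    """Pair each label with its score, highest score first."""
    return sorted(zip(labels, scores), key=lambda pair: pair[1], reverse=True)


labels = ["daisy", "dandelion", "roses", "sunflowers", "tulips"]
scores = softmax([2.0, 0.5, -1.0, 3.5, 0.0])  # hypothetical logits
for label, score in rank_labels(labels, scores):
    print("%s (score = %.5f)" % (label, score))
```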

For more detail, view this great line-by-line explanation of classify.py.

The graph-loading code in the original classifier script was broken, so I replaced it with the graph_def = tf.GraphDef() approach used in classify.py.

With zero rocket science and a handful of code, we've created a decent flower image classifier that can process about five images per second on an off-the-shelf laptop computer.
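To check a throughput figure like five images per second on your own machine, you can time the classification step in a loop. In this sketch, `classify` is a hypothetical callable that would wrap the sess.run call from classify.py. Timing separate python classify.py runs would also count interpreter start-up and graph loading, so the resulting number would come out lower.

```python
import time
from typing import Callable, Sequence


def images_per_second(classify: Callable[[str], object],
                      image_paths: Sequence[str]) -> float:
    """Run classify() on every path and return the average images per second."""
    if not image_paths:
        raise ValueError("need at least one image to time")
    start = time.perf_counter()
    for path in image_paths:
        classify(path)  # hypothetical: would run one image through the model
    elapsed = time.perf_counter() - start
    return len(image_paths) / max(elapsed, 1e-9)  # guard against a zero interval
```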

In the second part of this series, we'll use this information to train a different image classifier, then take a look under the hood with TensorBoard. If you want to try out TensorBoard, keep this container running by not exiting the shell that docker run started.
