While languages like Python and R are increasingly popular for data science, C and C++ can be strong choices when efficiency matters. In this article, we will use C99 and C++11 to write a program that fits the first set of Anscombe's quartet, a dataset I explain below.

I wrote about my motivation for continually learning languages in an article covering Python and GNU Octave, which is worth reviewing. All of the programs are meant to be run on the command line, not with a graphical user interface (GUI). The full examples are available in the polyglot_fit repository.

The programming task

The program you will write in this series:

- Reads data from a CSV file

- Fits the data with a straight line (i.e., f(x) = m ⋅ x + q)

- Plots the result to an image file

This is a common situation that many data scientists have encountered. The example data is the first set of Anscombe's quartet, shown in the table below. The quartet consists of four artificially constructed datasets that give the same results when fitted with a straight line, although their plots are very different. The data file is a text file with tabs as column separators and a few lines as a header. This task will use only the first set (i.e., the first two columns).

| I: x | I: y | II: x | II: y | III: x | III: y | IV: x | IV: y |
|---|---|---|---|---|---|---|---|
| 10.0 | 8.04 | 10.0 | 9.14 | 10.0 | 7.46 | 8.0 | 6.58 |
| 8.0 | 6.95 | 8.0 | 8.14 | 8.0 | 6.77 | 8.0 | 5.76 |
| 13.0 | 7.58 | 13.0 | 8.74 | 13.0 | 12.74 | 8.0 | 7.71 |
| 9.0 | 8.81 | 9.0 | 8.77 | 9.0 | 7.11 | 8.0 | 8.84 |
| 11.0 | 8.33 | 11.0 | 9.26 | 11.0 | 7.81 | 8.0 | 8.47 |
| 14.0 | 9.96 | 14.0 | 8.10 | 14.0 | 8.84 | 8.0 | 7.04 |
| 6.0 | 7.24 | 6.0 | 6.13 | 6.0 | 6.08 | 8.0 | 5.25 |
| 4.0 | 4.26 | 4.0 | 3.10 | 4.0 | 5.39 | 19.0 | 12.50 |
| 12.0 | 10.84 | 12.0 | 9.13 | 12.0 | 8.15 | 8.0 | 5.56 |
| 7.0 | 4.82 | 7.0 | 7.26 | 7.0 | 6.42 | 8.0 | 7.91 |
| 5.0 | 5.68 | 5.0 | 4.74 | 5.0 | 5.73 | 8.0 | 6.89 |
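For reference, the straight-line fit named in the task list has a closed-form least-squares solution. The block below is a sketch in the notation f(x) = m ⋅ x + q used above; the symbols n (number of points), x̄, and ȳ (sample means) are not in the original text and are introduced here only for illustration.

```latex
m = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2},
\qquad
q = \bar{y} - m\,\bar{x}
```

For the first set in the table, n = 11, x̄ = 9, and ȳ ≈ 7.5009. The numerator sums to 55.01 and the denominator to 110, so m ≈ 0.50009 and q ≈ 7.5009 − 0.50009 ⋅ 9 ≈ 3.00009.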

The C way

C is a general-purpose programming language that is among the most popular languages in use today (according to data from the TIOBE Index, RedMonk Programming Language Rankings, Popularity of Programming Language Index, and State of the Octoverse of GitHub). It is quite an old language (circa 1973), and many successful programs were written in it (e.g., the Linux kernel and Git, to name just two). It is also one of the closest languages to the inner workings of the computer, as it can manipulate memory directly. It is a compiled language; therefore, the source code has to be translated by a compiler into machine code. Its standard library is small and light on features, so other libraries have been developed to provide the missing functionality.

It is the language I use the most for number crunching, mostly because of its performance. I find it rather tedious to use, as it needs a lot of boilerplate code, but it is well supported in various environments. The C99 standard (published in 1999) is a revision that adds some nifty features and is well supported by compilers.

I will cover the necessary background of C and C++ programming along the way so both beginners and advanced users can follow along.

Installation

To develop with C99, you need a compiler. I normally use Clang, but GCC is another valid open source compiler. For linear fitting, I chose to use the GNU Scientific Library. For plotting, I could not find any sensible library, and therefore this program relies on an external program: Gnuplot. The example also uses a dynamic data structure to store data, which is defined in the Berkeley Software Distribution (BSD).

Installing in Fedora is as easy as running:

sudo dnf install clang gnuplot gsl gsl-devel

Commenting code

In C99, a comment starts with //, and the rest of the line is discarded by the compiler. Alternatively, anything between /* and */ is discarded as well.

// This is a comment ignored by the compiler.

/* Also this is ignored */

Necessary libraries

Libraries are composed of two parts:

- A header file that contains a description of the functions

- A source file that contains the functions' definitions

Header files are included in the source, while the compiled libraries are linked into the executable. Therefore, the header files needed for this example are:

// Input/Output utilities

#include <stdio.h>

// The standard library

#include <stdlib.h>

// String manipulation utilities

#include <string.h>

// BSD queue

#include <sys/queue.h>

// GSL scientific utilities

#include <gsl/gsl_fit.h>

#include <gsl/gsl_statistics_double.h>

Main function

In C, the program must be inside a special function called main():

int main(void) {

...

}

This differs from Python (covered in the last tutorial), which runs whatever code it finds in the source files.

Defining variables

In C, variables have to be declared before they are used, and they have to be associated with a type. Whenever you want to use a variable, you have to decide what kind of data to store in it. You can also specify if you intend to use a variable as a constant value, which is not necessary, but the compiler can benefit from this information. From the fitting_C99.c program in the repository:

const char *input_file_name = "anscombe.csv";

const char *delimiter = "\t";

const unsigned int skip_header = 3;

const unsigned int column_x = 0;

const unsigned int column_y = 1;

const char *output_file_name = "fit_C99.csv";

const unsigned int N = 100;

Arrays in C are not dynamic, in the sense that their length is fixed when they are created and cannot grow afterward. Here, the length is decided in advance (i.e., before compilation):

int data_array[1024];

Since you normally do not know how many data points are in a file, use a singly linked list. This is a dynamic data structure that can grow indefinitely. Luckily, the BSD sys/queue.h header provides linked lists. Here is an example definition:

struct data_point {

double x;

double y;

SLIST_ENTRY(data_point) entries;

};

SLIST_HEAD(data_list, data_point) head = SLIST_HEAD_INITIALIZER(head);

SLIST_INIT(&head);

This example defines a list of data_point structures, each of which contains both an x value and a y value. The syntax is rather complicated but intuitive, and describing it in detail would be too wordy.
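The list operations used in this article can be sketched in isolation. This is a minimal example, assuming a platform that ships the BSD sys/queue.h header (glibc does); the helper names push_point(), count_points(), and free_points() are hypothetical and do not appear in the repository.

```c
#include <assert.h>
#include <stdlib.h>
#include <sys/queue.h>

/* One (x, y) sample; the entries field links it to the next node. */
struct data_point {
    double x;
    double y;
    SLIST_ENTRY(data_point) entries;
};

/* Declares struct data_list, the type of the list head. */
SLIST_HEAD(data_list, data_point);

/* Hypothetical helper: allocate a node and put it at the front.
   Returns 0 on success, -1 if malloc() fails. */
int push_point(struct data_list *head, double x, double y) {
    assert(head != NULL);
    struct data_point *datum = malloc(sizeof *datum);
    if (datum == NULL) {
        return -1;
    }
    datum->x = x;
    datum->y = y;
    SLIST_INSERT_HEAD(head, datum, entries);
    return 0;
}

/* Hypothetical helper: walk the list and count its nodes. */
size_t count_points(struct data_list *head) {
    size_t count = 0;
    struct data_point *datum;
    SLIST_FOREACH(datum, head, entries) {
        count += 1;
    }
    return count;
}

/* Hypothetical helper: free every node, leaving an empty list. */
void free_points(struct data_list *head) {
    while (!SLIST_EMPTY(head)) {
        struct data_point *datum = SLIST_FIRST(head);
        SLIST_REMOVE_HEAD(head, entries);
        free(datum);
    }
}
```

Starting from an empty head declared as struct data_list head = SLIST_HEAD_INITIALIZER(head);, two push_point() calls make count_points() return 2. Because insertion happens at the head, the most recently pushed point is the first one in the list.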

Printing output

To print on the terminal, you can use the printf() function, which works like Octave's printf() function (described in the first article):

printf("#### Anscombe's first set with C99 ####\n");

The printf() function does not automatically add a newline at the end of the printed string, so you have to add it. The first argument is a string that can contain format information for the other arguments that can be passed to the function, such as:

printf("Slope: %f\n", slope);

Reading data

Now comes the hard part… There are some libraries for CSV file parsing in C, but none seemed stable or popular enough to be in the Fedora packages repository. Instead of adding a dependency for this tutorial, I decided to write this part on my own. Again, going into details would be too wordy, so I will only explain the general idea. Some lines in the source will be ignored for the sake of brevity, but you can find the complete example in the repository.

First, open the input file:

FILE* input_file = fopen(input_file_name, "r");

Then read the file line-by-line until there is an error or the file ends:

while (!ferror(input_file) && !feof(input_file)) {

size_t buffer_size = 0;

char *buffer = NULL;

getline(&buffer, &buffer_size, input_file);

...

}

The getline() function is a nice recent addition from the POSIX.1-2008 standard. It can read a whole line in a file and take care of allocating the necessary memory. Each line is then split into tokens with the strtok() function. Looping over the tokens, select the columns that you want:

char *token = strtok(buffer, delimiter);

while (token != NULL)

{

double value;

sscanf(token, "%lf", &value);

if (column == column_x) {

x = value;

} else if (column == column_y) {

y = value;

}

column += 1;

token = strtok(NULL, delimiter);

}

Finally, when the x and y values are selected, insert the new data point in the linked list:

struct data_point *datum = malloc(sizeof(struct data_point));

datum->x = x;

datum->y = y;

SLIST_INSERT_HEAD(&head, datum, entries);

The malloc() function dynamically allocates (reserves) some persistent memory for the new data point.
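The reading steps above (line loop, tokenizing, column selection) can be condensed into one sketch. It is a minimal example, not the code from the repository: the helper names parse_xy() and count_data_rows() are hypothetical, it uses strtod() in place of sscanf() so that non-numeric tokens can be detected, and it ends the loop on the return value of getline() instead of checking feof().

```c
#define _POSIX_C_SOURCE 200809L /* exposes getline() under -std=c99 */

#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: pull two columns out of one delimited line.
   strtok() modifies the line in place. Returns 1 when both columns
   were found and parsed as numbers, 0 otherwise. */
int parse_xy(char *line, const char *delimiter,
             unsigned int column_x, unsigned int column_y,
             double *x, double *y) {
    unsigned int column = 0;
    int found = 0; /* bit 0: x seen, bit 1: y seen */
    for (char *token = strtok(line, delimiter); token != NULL;
         token = strtok(NULL, delimiter)) {
        char *end = NULL;
        const double value = strtod(token, &end);
        if (end != token) { /* the token started with a number */
            if (column == column_x) { *x = value; found |= 1; }
            if (column == column_y) { *y = value; found |= 2; }
        }
        column += 1;
    }
    return found == 3;
}

/* Hypothetical helper: count the lines of a stream whose first two
   columns parse as numbers, skipping skip_header lines first. */
size_t count_data_rows(FILE *stream, const char *delimiter,
                       unsigned int skip_header) {
    assert(stream != NULL);
    char *buffer = NULL; /* getline() allocates and grows this */
    size_t buffer_size = 0;
    size_t row = 0;
    size_t count = 0;
    /* getline() returns -1 at end of file or on error. */
    while (getline(&buffer, &buffer_size, stream) != -1) {
        double x = 0.0;
        double y = 0.0;
        if (row >= skip_header && parse_xy(buffer, delimiter, 0, 1, &x, &y)) {
            count += 1;
        }
        row += 1;
    }
    free(buffer); /* one buffer is reused for every line */
    return count;
}
```

For a stream holding two header lines followed by the rows 10.0, 8.04 and 8.0, 6.95 (tab-separated), count_data_rows(stream, "\t", 2) returns 2. Note that strtok() treats a run of adjacent delimiters as one, so an empty field would shift the column numbers.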

Fitting data

The GSL linear fitting function gsl_fit_linear() expects simple arrays for its input. Therefore, since you won't know in advance the size of the arrays you create, you must manually allocate their memory:

const size_t entries_number = row - skip_header - 1;

double *x = malloc(sizeof(double) * entries_number);

double *y = malloc(sizeof(double) * entries_number);

Then, loop over the linked list to save the relevant data to the arrays:

SLIST_FOREACH(datum, &head, entries) {

const double current_x = datum->x;

const double current_y = datum->y;

x[i] = current_x;

y[i] = current_y;

i += 1;

}

Now that you are done with the linked list, clean it up. Always release the memory that has been manually allocated to prevent a memory leak. Memory leaks are bad, bad, bad. Every time memory is not released, a garden gnome loses its head:

while (!SLIST_EMPTY(&head)) {

struct data_point *datum = SLIST_FIRST(&head);

SLIST_REMOVE_HEAD(&head, entries);

free(datum);

}

Finally, finally(!), you can fit your data:

gsl_fit_linear(x, 1, y, 1, entries_number,

&intercept, &slope,

&cov00, &cov01, &cov11, &chi_squared);

const double r_value = gsl_stats_correlation(x, 1, y, 1, entries_number);

printf("Slope: %f\n", slope);

printf("Intercept: %f\n", intercept);

printf("Correlation coefficient: %f\n", r_value);

Plotting

You must use an external program for the plotting. Therefore, save the fitting function to an external file:

const double step_x = ((max_x + 1) - (min_x - 1)) / N;

for (unsigned int i = 0; i < N; i += 1) {

const double current_x = (min_x - 1) + step_x * i;

const double current_y = intercept + slope * current_x;

fprintf(output_file, "%f\t%f\n", current_x, current_y);

}

The Gnuplot command for plotting both files is:

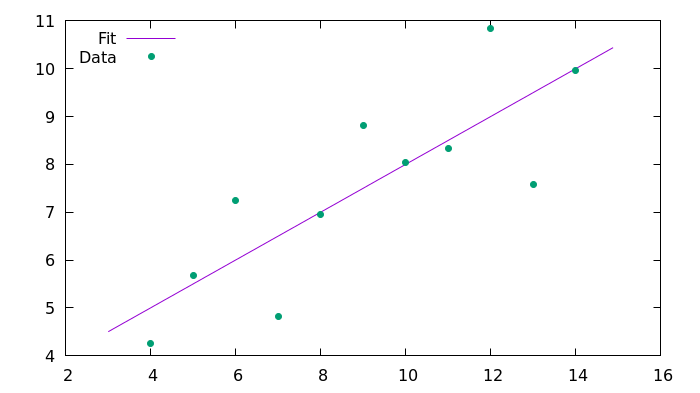

plot 'fit_C99.csv' using 1:2 with lines title 'Fit', 'anscombe.csv' using 1:2 with points pointtype 7 title 'Data'

Results

Before running the program, you must compile it:

clang -std=c99 -I/usr/include/ fitting_C99.c -L/usr/lib/ -L/usr/lib64/ -lgsl -lgslcblas -o fitting_C99

This command tells the compiler to use the C99 standard, read the fitting_C99.c file, link the gsl and gslcblas libraries, and save the result to fitting_C99. The resulting output on the command line is:

#### Anscombe's first set with C99 ####

Slope: 0.500091

Intercept: 3.000091

Correlation coefficient: 0.816421

The resulting image generated with Gnuplot is shown above.
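The numbers above can be cross-checked without GSL. The following is a minimal sketch of an ordinary least-squares fit, not a replacement for gsl_fit_linear(): fit_line() is a hypothetical helper, and it reports the squared correlation coefficient rather than the coefficient itself so that it does not need the math library.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: least-squares fit y = intercept + slope * x,
   plus the squared Pearson correlation coefficient. Needs n >= 2,
   at least two distinct x values, and at least two distinct y values. */
void fit_line(const double *x, const double *y, size_t n,
              double *slope, double *intercept, double *r_squared) {
    assert(n >= 2);

    /* Sample means of x and y. */
    double mean_x = 0.0;
    double mean_y = 0.0;
    for (size_t i = 0; i < n; i += 1) {
        mean_x += x[i];
        mean_y += y[i];
    }
    mean_x /= (double)n;
    mean_y /= (double)n;

    /* Sums of squared and cross deviations from the means. */
    double sxx = 0.0;
    double syy = 0.0;
    double sxy = 0.0;
    for (size_t i = 0; i < n; i += 1) {
        const double dx = x[i] - mean_x;
        const double dy = y[i] - mean_y;
        sxx += dx * dx;
        syy += dy * dy;
        sxy += dx * dy;
    }

    *slope = sxy / sxx;
    *intercept = mean_y - *slope * mean_x;
    *r_squared = (sxy * sxy) / (sxx * syy);
}
```

Fed the eleven points of the first set, this gives a slope of about 0.50009, an intercept of about 3.00009, and a squared correlation of about 0.6665, which is consistent with the square of 0.816421.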

The C++11 way

C++ is a general-purpose programming language that is also among the most popular languages in use today. It was created as a successor of C (in 1983) with an emphasis on object-oriented programming (OOP). C++ is commonly regarded as a superset of C, so a C program should be able to be compiled with a C++ compiler. This is not exactly true, as there are some corner cases where they behave differently. In my experience, C++ needs less boilerplate than C, but the syntax is more difficult if you want to develop objects. The C++11 standard (published in 2011) is a revision that adds some nifty features and is more or less supported by compilers.

Since C++ is largely compatible with C, I will just highlight the differences between the two. If I do not cover a section in this part, it means that it is the same as in C.

Installation

The dependencies for the C++ example are the same as the C example. On Fedora, run:

sudo dnf install clang gnuplot gsl gsl-devel

Necessary libraries

Libraries work in the same way as in C, but the include directives are slightly different:

#include <cstdlib>

#include <cstring>

#include <iostream>

#include <fstream>

#include <string>

#include <vector>

#include <algorithm>

extern "C" {

#include <gsl/gsl_fit.h>

#include <gsl/gsl_statistics_double.h>

}

Since the GSL libraries are written in C, you must inform the compiler about this peculiarity.

Defining variables

C++ supports more data types (classes) than C, such as a string type that has many more features than its C counterpart. Update the definition of the variables accordingly:

const std::string input_file_name("anscombe.csv");

For structured objects like strings, you can define the variable without using the = sign.

Printing output

You can use the printf() function, but the cout object is more idiomatic. Use the operator << to indicate the string (or objects) that you want to print with cout:

std::cout << "#### Anscombe's first set with C++11 ####" << std::endl;

...

std::cout << "Slope: " << slope << std::endl;

std::cout << "Intercept: " << intercept << std::endl;

std::cout << "Correlation coefficient: " << r_value << std::endl;

Reading data

The scheme is the same as before. The file is opened and read line-by-line, but with a different syntax:

std::ifstream input_file(input_file_name);

while (input_file.good()) {

std::string line;

getline(input_file, line);

...

}

The line tokens are extracted with the same function as in the C99 example. Instead of using standard C arrays, use two vectors. Vectors are containers in the C++ standard library that behave like C arrays but manage their memory dynamically, without explicit calls to malloc():

std::vector<double> x;

std::vector<double> y;

// Adding an element to x and y:

x.emplace_back(value);

y.emplace_back(value);

Fitting data

For fitting in C++, you do not have to loop over a list, as vectors are guaranteed to have contiguous memory. You can pass pointers to the vectors' buffers directly to the fitting function:

gsl_fit_linear(x.data(), 1, y.data(), 1, entries_number,

&intercept, &slope,

&cov00, &cov01, &cov11, &chi_squared);

const double r_value = gsl_stats_correlation(x.data(), 1, y.data(), 1, entries_number);

std::cout << "Slope: " << slope << std::endl;

std::cout << "Intercept: " << intercept << std::endl;

std::cout << "Correlation coefficient: " << r_value << std::endl;

Plotting

Plotting is done with the same approach as before. Write to a file:

const double step_x = ((max_x + 1) - (min_x - 1)) / N;

for (unsigned int i = 0; i < N; i += 1) {

const double current_x = (min_x - 1) + step_x * i;

const double current_y = intercept + slope * current_x;

output_file << current_x << "\t" << current_y << std::endl;

}

output_file.close();

And then use Gnuplot for the plotting.

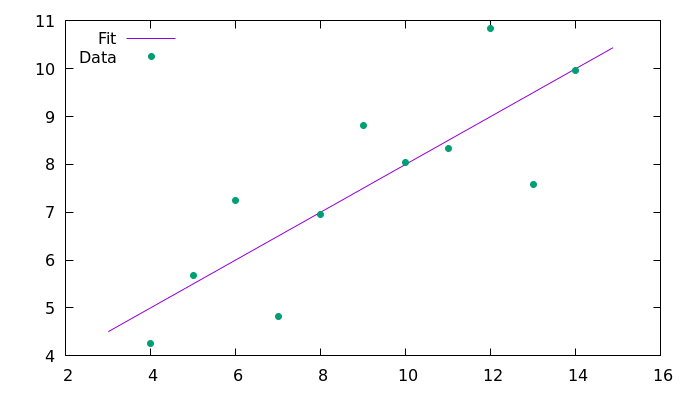

Results

Before running the program, it must be compiled with a similar command:

clang++ -std=c++11 -I/usr/include/ fitting_Cpp11.cpp -L/usr/lib/ -L/usr/lib64/ -lgsl -lgslcblas -o fitting_Cpp11

The resulting output on the command line is:

#### Anscombe's first set with C++11 ####

Slope: 0.500091

Intercept: 3.00009

Correlation coefficient: 0.816421

The resulting image generated with Gnuplot is shown above.

Conclusion

This article provides examples for a data fitting and plotting task in C99 and C++11. Since C++ is largely compatible with C, this article exploited their similarities for writing the second example. In some aspects, C++ is easier to use because it partially relieves the burden of explicitly managing memory. But the syntax is more complex because it introduces the possibility of writing classes for OOP. However, it is still possible to write software in C with the OOP approach. Since OOP is a style of programming, it can be used in any language. There are some great examples of OOP in C, such as the GObject and Jansson libraries.

For number crunching, I prefer working in C99 due to its simpler syntax and widespread support. Until recently, C++11 was not as widely supported, and I tended to avoid the rough edges in the previous versions. For more complex software, C++ could be a good choice.

Do you use C or C++ for data science as well? Share your experiences in the comments.
